Thrill YouTube Tutorial: High-Performance Algorithmic Distributed Computing with C++

Posted on 2020-06-01 11:13 by Timo Bingmann. Tags: #talk #university #thrill

This post announces the completion of my new tutorial presentation and YouTube video: "Thrill Tutorial: High-Performance Algorithmic Distributed Computing with C++".

YouTube video link: https://youtu.be/UxW5YyETLXo (2h 41min)

Slide Deck: slides-20200601-thrill-tutorial.pdf (21.3 MiB) (114 slides)

In this tutorial we present our new distributed Big Data processing framework called Thrill (https://project-thrill.org). It is a C++ framework consisting of a set of basic scalable algorithmic primitives like mapping, reducing, sorting, merging, joining, and additional MPI-like collectives. This set of primitives can be combined into larger, more complex algorithms, such as WordCount, PageRank, and suffix sorting. Such compound algorithms can then be run on very large inputs using a distributed computing cluster with external memory.

After introducing the audience to Thrill, we guide participants through the initial steps of downloading and compiling the software package. The tutorial then gives an overview of the challenges of programming real distributed machines, and of the models and frameworks for meeting these challenges. With these foundations, Thrill's DIA programming model is introduced with an extensive listing of DIA operations and how to actually use them. The participants are then given a set of small example tasks to gain hands-on experience with DIAs.

After the hands-on session, the tutorial continues with more details on how to run Thrill programs on clusters and how to generate execution profiles. Then, deeper details of Thrill's internal software layers are discussed to advance the participants' mental model of how Thrill executes DIA operations. The final hands-on tutorial is designed as a concerted group effort to implement K-means clustering for 2D points.

The video on YouTube (https://youtu.be/UxW5YyETLXo) contains both presentation and live-coding sessions. It has a high information density and covers many topics.

Table of Contents

- Thrill Motivation Pitch

- Introduction to Parallel Machines

- 0:20:20 The Real Deal: Examples of Machines

- 0:24:50 Networks: Types and Measurements

- 0:31:47 Models

- 0:35:58 Implementations and Frameworks

- The Thrill Framework

- 0:39:28 Thrill's DIA Abstraction and List of Operations

- 0:44:00 Illustrations of DIA Operations

- 0:55:32 Tutorial: Playing with DIA Operations

- 1:08:21 Execution of Collective Operations in Thrill

- 1:21:10 Tutorial: Running Thrill on a Cluster

- 1:27:02 Tutorial: Logging and Profiling

- 1:32:14 Going Deeper into Thrill

- 1:32:49 Layers of Thrill

- 1:37:59 File - Variable-Length C++ Item Store

- 1:43:54 Readers and Writers

- 1:46:43 Thrill's Communication Abstraction

- 1:48:07 Stream - Async Big Data All-to-All

- 1:49:46 Thrill's Data Processing Pipelines

- 1:51:29 Thrill's Current Sample Sort

- 1:54:14 Optimization: Consume and Keep

- 1:59:34 Memory Allocation Areas in Thrill

- 2:01:17 Memory Distribution in Stages

- 2:02:22 Pipelined Data Flow Processing

- 2:08:48 ReduceByKey Implementation

- 2:12:06 Tutorial: First Steps towards k-Means

- 2:35:56 Conclusion

Transcript

1 - Thrill Tutorial Title Slide

Hello and welcome to this recorded tutorial on Thrill, our high-performance algorithmic distributed computation framework in C++. It is a flexible, general-purpose framework for implementing distributed algorithms. In a sense it is similar to Apache Spark or Apache Flink, but at the same time it brings back designs from MPI and focuses on high performance.

My name is Timo Bingmann and I was one of the initial core developers of Thrill. Thrill's design was part of my PhD thesis, but it originally started as a one-year lab course with six master's students and three PhD candidates at the Karlsruhe Institute of Technology in Karlsruhe, Germany.

This tutorial covers Thrill in the version available at the start of June 2020.

2 - Abstract

This is just a slide with an abstract and a Creative Commons license for this slide deck.

3 - Table of Contents

This whole tutorial is rather long and covers many aspects of Thrill, from a high-level beginner's tutorial down to technical details of some of the distributed algorithms.

I have put video timestamps on this table of contents slide such that it is easier to jump to the sections you are interested in, and such that you can also see how long each section is. In the YouTube description there are of course also links to the various subsections.

In Part 1 I will try to get you interested in Thrill by presenting some benchmarks, discussing the framework's general design goals, showing you an actual WordCount implementation, and then demonstrating how easy it is to download, compile, and run a simple "Hello World" program.

Part 2 then covers parallel machines and distributed computing. We all know parallel programming is hard, ... but it is even harder, or downright impossible, if you know too little about the machines you are programming. And since there is a wide variety of parallel machines and interconnection networks, we will first cover those and then look at models and frameworks which make programming them easier. This part lays the groundwork for better understanding the challenges we try to tackle with Thrill.

And Part 3 is then the main introduction into Thrill.

It starts with the DIA model: the distributed immutable array, which is the high-level concept used in Thrill to program arbitrarily complex algorithms by composing them out of primitive operations such as Map, Sort, Reduce, Zip, and many others.

This first section of Part 3 then continues by giving you a high-level picture of each of these operations. The illustrations on the slides in the chapter "Thrill's DIA Abstraction and List of Operations" also serve as a reference for which DIA operations are available and what they do.

Immediately after the DIA operations catalog, the tutorial part continues with tips on how to actually write code once you know how you want to compose the DIA primitives. The tutorial section finishes with a hands-on live demonstration of how to write a few simple Thrill programs.

Once you are convinced that Thrill is actually a quite versatile framework, I will show you how to run Thrill programs on clusters of machines, and then how to use the built-in logging and profiling features of Thrill to get a better idea of what is actually happening.

The following section "Going Deeper into Thrill" then drops all high-level abstraction and dives right down into some of the technical details and highlights of the framework. This subsection is for people interested in extending Thrill or writing new DIA operations, but it is also worth considering if you run into unexpected problems, because these can often be resolved by knowing more about the underlying implementation details.

The last tutorial section is then a hands-on live-coding demonstration of how to implement K-means in Thrill.

And the conclusion then wraps up the entire presentation and discusses some future challenges and trajectories.

4 - Thrill Motivation Pitch

Okay, on to the first part, in which I will try to get you interested in Thrill.

5 - Weak-Scaling Benchmarks

Instead of immediately starting with details on Thrill, I will now show you some benchmarks comparing it against Apache Spark and Apache Flink.

The next slide will show weak-scaling results on the following four microbenchmarks: WordCount, PageRank, TeraSort, and K-Means.

WordCount is a simple distributed reduction on strings from text files that counts the occurrences of all distinct words. In this case we used parts of the CommonCrawl web corpus as input.

PageRank is actually sparse matrix-vector multiplication in disguise. It requires a join-by-index of the current ranks with outgoing links and performs exactly ten iterations over the data.
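To make "matrix-vector multiplication in disguise" concrete, here is a minimal sequential C++ sketch, not the benchmark code. The function name `PageRank`, the damping factor 0.85, and the choice to simply skip pages without out-links are assumptions made for illustration. Each iteration pushes `rank[u] / outdeg(u)` along every out-link of `u`, which is one product of the sparse link matrix with the rank vector; in a distributed setting, pairing `rank[u]` with the out-links of `u` is the join-by-index.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper: PageRank as repeated sparse matrix-vector products.
// links[u] lists the pages that page u links to (its out-links).
std::vector<double> PageRank(const std::vector<std::vector<std::size_t>>& links,
                             int iterations, double damping = 0.85) {
    const std::size_t n = links.size();
    std::vector<double> rank(n, 1.0 / n);
    for (int it = 0; it < iterations; ++it) {
        // Every page starts the iteration with the teleport share (1 - d) / n.
        std::vector<double> next(n, (1.0 - damping) / n);
        for (std::size_t u = 0; u < n; ++u) {
            if (links[u].empty()) continue;  // dangling pages are ignored in this sketch
            // Column u of the link matrix: spread rank[u] evenly over u's out-links.
            const double share = damping * rank[u] / links[u].size();
            for (std::size_t v : links[u]) next[v] += share;
        }
        rank.swap(next);
    }
    return rank;
}
```

Calling `PageRank(links, 10)` mirrors the benchmark's fixed ten iterations. On a simple cycle all pages keep equal rank, and without dangling pages the ranks keep summing to one.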

TeraSort is, well, sorting of 100 byte random records.

And K-Means is a simple machine learning algorithm which iteratively improves cluster centers by assigning each point to its nearest center and then calculating new centers. Again, we run exactly ten iterations of the algorithm.
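One Lloyd-style K-Means iteration on 3-dimensional points might look like the following sequential sketch. The names `Point`, `Dist2`, and `KMeansStep` are hypothetical, and keeping the old center for an empty cluster is just one possible policy, not necessarily what the benchmark implementations do.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <limits>
#include <vector>

using Point = std::array<double, 3>;  // 3-dimensional points

// Squared Euclidean distance between two points.
double Dist2(const Point& a, const Point& b) {
    double d = 0;
    for (std::size_t k = 0; k < a.size(); ++k) d += (a[k] - b[k]) * (a[k] - b[k]);
    return d;
}

// One iteration: assign each point to its nearest center, then replace
// every center by the mean of the points assigned to it.
std::vector<Point> KMeansStep(const std::vector<Point>& points,
                              const std::vector<Point>& centers) {
    std::vector<Point> sum(centers.size(), Point{0, 0, 0});
    std::vector<std::size_t> count(centers.size(), 0);
    for (const Point& p : points) {
        std::size_t best = 0;
        double best_d = std::numeric_limits<double>::max();
        for (std::size_t c = 0; c < centers.size(); ++c) {
            const double d = Dist2(p, centers[c]);
            if (d < best_d) { best_d = d; best = c; }
        }
        for (std::size_t k = 0; k < 3; ++k) sum[best][k] += p[k];  // accumulate vectors
        ++count[best];
    }
    std::vector<Point> next = centers;  // empty clusters keep their old center
    for (std::size_t c = 0; c < centers.size(); ++c)
        if (count[c] > 0)
            for (std::size_t k = 0; k < 3; ++k) next[c][k] = sum[c][k] / count[c];
    return next;
}
```

The inner loop is just scanning and adding small fixed-size vectors, which is the kind of work where compiled C++ has little overhead.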

We implemented all these benchmarks in Thrill, Spark, and Flink. Most often these are included in the "examples" directory of each framework. We took great care that the implementations perform the same basic algorithmic steps in each framework, such that every framework is actually doing the same basic computation.

Here on the side of this slide is also the number of lines of code needed to implement these benchmarks in Thrill.

The benchmarks were done on a cluster of relatively beefy machines in the Amazon EC2 cloud. These machines had 32 cores, lots of RAM, local SSDs, and a virtualized network with up to 1 Gigabyte/sec throughput.

6 - Experimental Results: Slowdowns

And here are the results.

What is plotted here are slowdown results, with the slowdown relative to the fastest framework on the y-axis. And you will immediately see that Thrill is always the fastest framework, so these slowdowns are actually relative to Thrill.

For WordCount, Spark is something like a factor of 2.5 slower on 16 machines. Remember that each of these machines had 32 cores, so that is actually 512 cores here. Flink is about a factor of 4 slower on WordCount here.

For PageRank, Thrill starts out strong, but the speedup drops with more machines, probably because the network becomes the bottleneck. With 16 machines, Thrill is about twice as fast as Flink, and still a factor of 4.5 faster than Spark.

For sorting, Thrill is only a factor of 1.4 faster than Flink, and a factor of 1.8 faster than Spark.

But for K-Means the difference is large again: Thrill is a factor of 5 faster than Spark with Scala, a factor 13 faster than Spark with Java, and a factor of more than 60 faster than Flink.

And how should we interpret these results?

Well, for me it appears that Thrill really shines when performing calculations on relatively small items. That is why K-Means is so fast: these are just 3-dimensional vectors, which are scanned over and added together. In C++ this compiles to fast native machine code, while with Java and Scala there is considerable overhead due to the JVM. And in particular Java appears to be less efficient than Scala, probably because Scala has better support for primitive types.

PageRank is similar: vectors of edge ids are processed and ranks are added together. But there is a lot more data transmitted between machines than in the K-Means benchmark.

In the WordCount example we can see how much faster reduction of strings using hash tables is in C++ than whatever aggregation method Spark and Flink use. I believe the JVM garbage collection also plays a large role in these results.

And for Sorting, well, I believe all frameworks could do considerably better. Thrill's sorting can definitely be improved. On the other hand, these are relatively large 100 byte records, which means that the JVM overhead is less pronounced.

I hope I now got you interested in Thrill, and we'll go on to discuss some of the goals which guided much of its design.

7 - Experimental Results: Throughput

This next slide contains the absolute throughput of these experiments, mainly for reference, and we're going to skip these for now.

8 - Example T = [tobeornottobe$]

So for me, the story of Thrill starts with suffix sorting. In my PhD I was tasked to engineer a parallel distributed suffix array construction algorithm.

The suffix array of a text is simply the list of starting positions of all its suffixes, sorted in lexicographic order of the suffixes. And here you can see the suffix array of the text "tobeornottobe$".
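A deliberately naive way to compute a suffix array, shown only to pin down the definition, is to sort the suffix start positions by comparing the suffixes themselves. `NaiveSuffixArray` is a hypothetical helper; its worst case of O(n^2 log n) character comparisons is exactly why scalable algorithms such as DC3 are needed for large inputs.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <numeric>
#include <string>
#include <string_view>
#include <vector>

// Naive suffix array: sort all suffix start positions by comparing the
// suffixes lexicographically (fine for tiny examples only).
std::vector<std::size_t> NaiveSuffixArray(const std::string& text) {
    std::vector<std::size_t> sa(text.size());
    std::iota(sa.begin(), sa.end(), std::size_t{0});  // positions 0, 1, ..., n-1
    const std::string_view t(text);
    std::sort(sa.begin(), sa.end(), [t](std::size_t a, std::size_t b) {
        return t.substr(a) < t.substr(b);  // compare suffix a with suffix b
    });
    return sa;
}
```

For "tobeornottobe$", with ASCII ordering where `$` sorts before all letters, it returns {13, 11, 2, 12, 3, 6, 10, 1, 4, 7, 5, 9, 0, 8}: first "$", then "be$", "beornottobe$", and so on.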

Many will not know this data structure, but it is a fundamental ingredient to text search algorithms, data compression, bioinformatics, and stringology algorithms.

9 - bwUniCluster

And you really want to construct this data structure for very large inputs. And that is why we considered how to use these clusters of machines in the basement of our computing center at KIT for suffix sorting.

10 - Big Data Batch Processing

But to use them, you need to select a framework to build on. And this is how the world presented itself to us back then:

On the one hand there is MPI, which is incredibly fast and still used by most software on supercomputers, but at the same time MPI is incredibly difficult to use correctly, which anyone who has programmed with MPI can attest to.

On the other side, there is MapReduce and Hadoop, which maybe has the simplest possible interface consisting of just two functions: map and reduce, well yes, and possibly complex partitioners and aggregators, but still, it's quite simple. The problem is it is also notoriously slow.

Somewhat better are the two frameworks Apache Spark and Apache Flink. They have a richer interface, for example they support sorting and other flexible operations, but they are still programmed in Java or Scala and are also known to be not as fast as MPI on supercomputers.

And when you see the world like this, what do you do? You try to fill the blank spot in the upper right corner. And this is our attempt with Thrill. And this is the list of requirements we had.

Many come from previous experience with implementing STXXL, a library of external memory algorithms. And other goals came from the suffix array construction use case, which I mentioned previously. While this use case is very specific, I believe our goals formulated from it are universally important.

The objective was to have a relatively small toolkit of well-implemented distributed algorithms, such as sorting, reduction, merging, zipping, windows, and many others. And to be able to combine or compound them efficiently into larger algorithms without losing too much performance.

The framework should work well with small items, for example single characters or integers as items, which is needed for the suffix array construction.

It should also use external disk memory automatically when needed, overlap computation and communication, something that MPI is pretty bad at, and also overlap external disk I/O if that is needed. These ideas of overlapping computation with asynchronous communication and I/O stem from STXXL's design.
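The overlapping idea can be illustrated with a small double-buffering sketch in standard C++: while block i is being processed, the fetch of block i+1 is already running in the background via `std::async`. `FetchBlock` and `SumAllBlocks` are hypothetical names, `FetchBlock` merely stands in for a network receive or disk read, and this is not how STXXL or Thrill implement overlapping internally.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <future>
#include <numeric>
#include <vector>

// Hypothetical stand-in for a slow transfer (network receive or disk read):
// block i holds the consecutive values i*block_size, ..., (i+1)*block_size - 1.
std::vector<std::uint64_t> FetchBlock(std::size_t i, std::size_t block_size) {
    std::vector<std::uint64_t> block(block_size);
    std::iota(block.begin(), block.end(), std::uint64_t{i * block_size});
    return block;
}

// Sums all blocks while prefetching block i+1 asynchronously, so that the
// "I/O" for the next block overlaps with the computation on the current one.
std::uint64_t SumAllBlocks(std::size_t num_blocks, std::size_t block_size) {
    std::uint64_t total = 0;
    if (num_blocks == 0) return total;
    auto next = std::async(std::launch::async, FetchBlock, std::size_t{0}, block_size);
    for (std::size_t i = 0; i < num_blocks; ++i) {
        std::vector<std::uint64_t> current = next.get();  // wait for block i
        if (i + 1 < num_blocks)  // start fetching block i+1 before computing
            next = std::async(std::launch::async, FetchBlock, i + 1, block_size);
        total += std::accumulate(current.begin(), current.end(), std::uint64_t{0});
    }
    return total;
}
```

With real I/O, the benefit appears when fetching and computing take similar time: the pipeline then runs at roughly the speed of the slower of the two instead of their sum.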

And last but not least Thrill should be in C++ to utilize compile-time optimization.

So we had really high goals. And it turned out that much work was concentrated on what I call the catacombs, or the lower layers of Thrill, which were needed to build up from MPI or another communication layer.

11 - Thrill's Design Goals

This next slide contains the same goals which I just explained on the previous slide.

The overarching goal was to have an easy way to program relatively complex distributed algorithms using C++.

Thrill is obviously a moving target. It may change, and this tutorial was recorded in June 2020.

12 - Thrill's Goal and Current Status

So what is the current status of Thrill?

We have a well-working implementation, which I however still consider a prototype, because the interface can change between releases. It has about 60K lines of relatively advanced C++ code, written by more than two dozen developers over three years.

We published a paper describing the ideas and prototype of Thrill in 2016 at the IEEE Conference on Big Data in Washington D.C. That paper contains the microbenchmarks I showed in the first slides.

Since then, we published a paper on distributed suffix array construction in 2018, also at the IEEE Conference on Big Data, this time in Seattle, Washington. And another group at KIT implemented Louvain graph clustering and presented it at Euro-Par in 2018. There was a poster at the Supercomputing Conference in 2018. And we have many more examples in Thrill's repository, like gradient descent and triangle counting.

And for the future, one main challenge is to introduce fault tolerance, which we know how to do in theory, but someone has to actually implement it in practice.

Real algorithmic research also needs to be done on the scalability of the underlying "catacomb" layers to millions of machines; it is unclear how to do that well in practice.

And what I think is very interesting is to add "predictability", where one wants to run an algorithm with 1000 items, and then to extrapolate that and predict how long it will take on a million items.

And maybe another challenge not listed here is to productize Thrill, which would mean to add convenience and interfaces to other software.

But more about future work in the final section of this talk.

13 - Example: WordCount in Thrill

And this is what WordCount looks like in Thrill. It is just 20 lines of code, excluding the main caller program.

This is actual running code, and it contains lots of lambdas and dot-chaining to compound the primitives together. We will take a closer look at the code later in this tutorial, once I have explained more about DIAs and the operations.

But for now WordCount is composed out of only five operations: ReadLines, FlatMap, ReduceByKey, Map, and WriteLines.
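For readers who have not seen the code yet, the same dataflow can be mimicked sequentially with the C++ standard library. This is only an analog and not Thrill's API: `WordCount` is a hypothetical function, a vector of lines stands in for ReadLines, and the returned vector stands in for WriteLines.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <sstream>
#include <string>
#include <utility>
#include <vector>

using Pair = std::pair<std::string, std::size_t>;

// Sequential analog of the WordCount dataflow: FlatMap -> ReduceByKey -> Map.
std::vector<std::string> WordCount(const std::vector<std::string>& lines) {
    // FlatMap: each input line emits zero or more (word, 1) pairs.
    std::vector<Pair> pairs;
    for (const std::string& line : lines) {
        std::istringstream words(line);
        std::string word;
        while (words >> word) pairs.emplace_back(word, 1);
    }
    // ReduceByKey: combine pairs with equal keys by adding their counts.
    // A distributed version would use hash tables; std::map keeps output sorted.
    std::map<std::string, std::size_t> counts;
    for (const Pair& p : pairs) counts[p.first] += p.second;
    // Map: format each (word, count) pair as an output line.
    std::vector<std::string> out;
    for (const auto& [word, count] : counts)
        out.push_back(word + ": " + std::to_string(count));
    return out;
}
```

For the input lines "to be or" and "not to be", this yields "be: 2", "not: 1", "or: 1", "to: 2".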

14 - DC3 Data-Flow Graph with Recursion

And this is a diagram of the suffix sorting algorithm which I alluded to earlier. This is just supposed to be a demonstration that even complex algorithms can be composed from these primitives. It contains 21 operations and is recursive.

15 - Tutorial: Clone, Compile, and Run Hello World

So let me show you a live demonstration of Thrill.

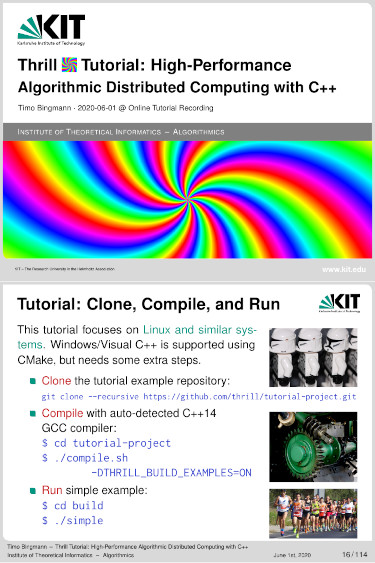

16 - Tutorial: Clone, Compile, and Run

This is an instructions slide on how to clone a simple "Hello World" repository, which contains Thrill as a git submodule, how to compile it with CMake, and how to run the simple example program.

I will now do the steps live such that you can actually see it working, and we'll extend the example program later on in this tutorial.

Okay, let me switch to a console and clone the git repository from GitHub. It's now downloading this tutorial project, and recursively downloading Thrill and all of its dependencies, which are git submodules.

The repository also contains a small shell script to make compiling easier. Once everything is downloaded, you can simply run it like that, but since I want to run the examples later on, we also have to add a flag to build them. The script detects the compiler and then starts building Thrill, including all of the examples.

Okay, the compilation of the examples does take some time. In the meanwhile we're going to look at the code of the simple program.

17 - Tutorial: Run Hello World

And this is the code of the "Hello World" program.

But before we get to the code, I somehow really like the photo of the hello world baby on this slide. I don't know why, it's just awesome on so many levels, I just love it.

Okay, sorry. So first you include "thrill/thrill.hpp", which is a straightforward way to include everything from Thrill.

And to better understand this Hello World program, there are two concepts needed to get you started: there is the thrill::Context class, and the thrill::Run() method.

Each thread or worker in Thrill has its own Context object. And the Context object is needed to create DIAs and many other things: and in this example to get the worker rank by calling my_rank(). The Context is, well, the context of the worker thread.

And to get a Context, you have to use thrill::Run(), which is a magic function that detects the environment the program is running in: whether it is launched with MPI or standalone, the number of threads and hosts; it then builds up a communication infrastructure, and so on.

In the end, it launches a number of threads and runs a function. And that function in this case is called "program". And what "program" does here is simply to print "Hello World" and the worker rank.

18 - Control Model: Spark vs MPI/Thrill

Okay. To better understand what happens here, we'll skip ahead to this comparison of the control model of Thrill or MPI and Spark.

Apache Spark has a hierarchical control model. There is the driver, which contains the main program. A cluster manager which runs continuously on the system, and an executor which is launched by the cluster manager and then deals out work to its actual worker threads.

Thrill and MPI work differently: All workers are launched in the beginning, and live until the program finishes. Data is passed between the threads by sending messages.

And this means that all threads can collectively coordinate because they can implicitly know what the other threads are currently doing and which messages they can expect to receive next.
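This SPMD control model, and what thrill::Run() conceptually does with the "program" function, can be mimicked with plain `std::thread`. It is a toy analog, not Thrill's implementation: `MiniContext`, `RunWorkers`, and `HelloWorld` are hypothetical names, and the real thrill::Run() additionally detects hosts, sets up the network, and much more.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <string>
#include <thread>
#include <vector>

// Hypothetical stand-in for a worker context: only the rank and worker count.
struct MiniContext {
    std::size_t my_rank;
    std::size_t num_workers;
};

// Hypothetical mini "Run": launch all workers up front, run the same program
// on each of them (SPMD style), and wait until every worker has finished.
void RunWorkers(std::size_t num_workers,
                const std::function<void(MiniContext&)>& program) {
    std::vector<std::thread> threads;
    for (std::size_t r = 0; r < num_workers; ++r)
        threads.emplace_back([&program, r, num_workers] {
            MiniContext ctx{r, num_workers};
            program(ctx);
        });
    for (std::thread& t : threads) t.join();
}

// Example program in the spirit of Hello World: each worker writes a greeting
// into its own slot (distinct slots, so no locking is needed).
std::vector<std::string> HelloWorld(std::size_t num_workers) {
    std::vector<std::string> lines(num_workers);
    RunWorkers(num_workers, [&lines](MiniContext& ctx) {
        lines[ctx.my_rank] = "Hello World, I am worker " + std::to_string(ctx.my_rank) +
                             " of " + std::to_string(ctx.num_workers);
    });
    return lines;
}
```

If the workers printed to the console directly instead, the lines would appear in a nondeterministic order, just like the worker ranks in the real output.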

19 - Tutorial: Hello World Output

Okay, so let's have a look if the compilation has finished... Yes, it has. And let's run the simple program, which is in the build directory here and called simple.

So this is the output of the simple program.

I will switch back to the slides to explain the output, mainly because the output there is colored.

So there is a bunch of output here: the first few lines start with "Thrill:" messages and inform you about the parameters Thrill detected in the system. It says it runs "2 test hosts" with "4 workers per host", and each virtual test host has a bit less than 8 GB of RAM, because this machine has 16 GB in total. And it uses a "local tcp network" for communication.

Then there are some details about the external memory, which we're going to skip for now.

And then we can see the actual output of our "Hello World" program. And of course the worker ranks, which are going to be in a random order.

And then the program finishes, and Thrill prints a final message with lots of statistics. It ran, well, a few microseconds, had 0 bytes in DIAs, did 0 bytes of network traffic, and so on. I'll also skip the last "malloc_tracker" line.

Okay, so far so good: we have a simple "Hello World" Thrill program.

20 - Introduction to Parallel Machines

In the next section, we are going to look at some real parallel machines, and then discuss the challenges that we face when programming these.

21 - The Real Deal: HPC Supercomputers

So the biggest supercomputer in the world is currently the Summit system at Oak Ridge National Laboratory in the United States. It has 4608 nodes with 44 cores each, in total a bit over 200k CPU cores. These CPUs use the rather exotic Power9 instruction set from IBM. But the real deal is that each node also contains six NVIDIA GPUs, which sum up to 2.2 million streaming multiprocessors (SMs).

As you may expect, this system is used for massive simulations in physics or chemistry, and maybe also for training neural networks and such.

22 - The Real Deal: HPC Supercomputers

The biggest system in Germany is the SuperMUC-NG at the Leibniz Rechenzentrum in Munich. It's the successor of the SuperMUC, and currently number 9 in the TOP500 list.

It is a more traditional supercomputer without GPU cards. It has 6336 nodes and 24 physical Intel Xeon cores per node, which is about 150k physical cores. These are connected with an Intel Omni-Path network, which is actually kind of exotic.

Oh right, I forgot the network on the Summit is a Mellanox EDR InfiniBand network, which is a lot more common in supercomputers than the Intel Omni-Path.

But they both allow remote direct memory access (RDMA) which is the "standard" communication method in supercomputers. It is also noteworthy that both systems don't have local disks.

23 - The Real Deal: HPC Supercomputers

So, we're getting smaller now. This is the biggest machine available at KIT, the ForHLR II, which is short for, well, Forschungshochleistungsrechner II.

So the ForHLR II has 1152 nodes and 20 physical cores per node, in total 23k physical cores. These are connected with an EDR InfiniBand network, and each node has a 480 GB local SSD.

24 - The Real Deal: Cloud Computing

Right, these were all supercomputers for scientific computing. But the industry uses "the Cloud".

Not much is known about the actual hardware of the big cloud computing providers, because they employ virtualization systems.

The available virtual systems range from very small ones to large ones, and these are two examples of large instances on the AWS cloud.

The first has 48 cores and 192 GB of RAM and they are connected by 10 Gigabit virtual Ethernet network.

The second has 32 cores, about 250 GB of RAM, 10 Gigabit network, and additionally 4 local 1.9 TB NVMe disks.

My own experience with performance on AWS has been very positive. Despite the "virtualized" hardware, the benchmark results that I got were very stable. The virtual CPUs perform very similarly to real ones, and the 10 Gigabit network actually delivers about 1 Gigabyte/sec throughput.

25 - The Real Deal: Custom Local Clusters

And then there are "real" server installations, which often contain many different systems, acquired over time, which makes them highly heterogeneous server installations.

And of course one can build "toy" clusters of Raspberry Pis, which are homogeneous, but have relatively little computing power per node.

26 - The Real Deal: Shared Memory

But there are also even smaller distributed machines: every shared memory parallel machine can be seen as a small distributed system, because they also have a communication protocol.

The protocol is the cache coherence protocol, which runs over QPI links between the sockets and processors.

Cache coherence is actually an implicit communication system, where writing to memory becomes "automatically" visible to the other cores.

But of course the details of cache coherence are much more complicated.

27 - The Real Deal: GPUs

And parallel computing is of course taken by GPUs to a whole new level:

Each of the 80 streaming multiprocessors on an NVIDIA Tesla V100 can perform operations in lock-step with 64 CUDA cores.

They share an L2 cache and communicate with the outside via NVLink, which is similar to the QPI links between shared-memory processors.

28 - Networks: Types and Measurements

Okay, that was sort of an overview of the parallel systems available these days.

Depending on the application, it is of course very important to pick the right system.

(skipping back a few slides)

Thrill is currently designed for small clusters of homogeneous systems, like smaller supercomputers, possibly with disks.

But it also works well with shared memory systems, because these actually have a very similar characteristic, as I was alluding to before.

And Thrill is designed for implementing complex algorithms, which are not embarrassingly parallel and thus cannot simply be scheduled as independent batch jobs.

But now let's look into more details of the communication networks, which are of prime importance for parallel computing.

29 - Types of Networks

I believe there are three major types of communication networks currently used in large computing systems.

The first is RDMA-based, which allows remote direct memory access. That means a node in the network can read or write the memory of a remote node directly without additional processing done by the remote CPU. This is possible via the memory access channels on the PCI bus. Of course there is also a privilege system in place, which means the remote system must have been granted access to the particular memory area before.

RDMA is implemented by various vendors for supercomputers, such as Mellanox's InfiniBand, Intel's Omni-Path, or Cray's Aries network.

There are also different network topologies, which limit the aggregate bandwidth available between hosts.

The second type is TCP/IP or UDP/IP based, which is what the Internet is built on. IP packets are usually transported via Ethernet, or whatever virtualization of Ethernet is used in cloud computing environments. Application protocols like HTTP or SMTP are built on TCP.

And the third type, that is often overlooked, are the internal networks between sockets and CPUs in shared-memory systems. These provide the implicit communication among cores in a multi-core, many-core, or GPU system.

So these were the three types.

30 - Round Trip Time (RTT) and Bandwidth

To compare Ethernet and InfiniBand on some real systems, I performed the following three small experiments.

The first is to measure the round-trip time with ping-pong messages: one machine sends a ping, the receiver answers with a pong, and the sender measures the round-trip time.
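The ping-pong protocol itself is simple enough to sketch. The following in-process analog, assumed here purely for illustration, bounces a counter between two threads through a shared `std::atomic` instead of sending network messages, so it measures shared-memory latency rather than Ethernet or InfiniBand latency. `PingPongRttNanos` is a hypothetical name.

```cpp
#include <atomic>
#include <cassert>
#include <chrono>
#include <thread>

// In-process ping-pong: returns the mean round-trip time in nanoseconds.
// rounds must be positive.
double PingPongRttNanos(int rounds) {
    std::atomic<int> ball{0};  // odd value = ping i in flight, even value = pong i
    std::thread responder([&ball, rounds] {
        for (int i = 0; i < rounds; ++i) {
            while (ball.load(std::memory_order_acquire) != 2 * i + 1)
                std::this_thread::yield();  // wait for ping i
            ball.store(2 * i + 2, std::memory_order_release);  // answer with pong i
        }
    });
    const auto start = std::chrono::steady_clock::now();
    for (int i = 0; i < rounds; ++i) {
        ball.store(2 * i + 1, std::memory_order_release);  // send ping i
        while (ball.load(std::memory_order_acquire) != 2 * i + 2)
            std::this_thread::yield();  // wait for pong i
    }
    const auto stop = std::chrono::steady_clock::now();
    responder.join();
    const std::chrono::duration<double, std::nano> elapsed = stop - start;
    return elapsed.count() / rounds;  // average over all round trips
}
```

As in the network experiment, the sender alone takes the timestamps, and averaging over many rounds smooths out clock resolution and scheduling noise.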

Let's immediately look at the results from these four systems that I tested. The first is a standard Ethernet local area network (LAN) at our research group. The second and third are virtualized 10 Gigabit Ethernet on the AWS cloud. And the fourth an RDMA InfiniBand network at our local computing cluster.

As you can see, the round-trip time in our local Ethernet is slowest, about 140 microseconds. The first AWS instance is about 100 microseconds, and the second about 80. But InfiniBand is then about an order of magnitude faster than the TCP/IP-based solutions.

Now let's look at the second experiment: Sync Send.

In this experiment one machine sends a continuous stream of messages to one other machine. And we simply measure the achieved bandwidth.

Our local Ethernet nearly reaches the 1 Gigabyte/sec mark. The first AWS system is rather disappointing, with 390 Megabyte/sec, but the second is much better with 1140 Megabyte/sec. The second probably has newer virtualized hardware. But again, InfiniBand reaches nearly 6 Gigabyte/sec in this scenario.

The last experiment is ASync Send: where everyone sends to everyone else in the same system in a randomized fashion. I don't have results from the first two systems for this experiment, because I designed the third scenario later.

On the second AWS system, the total asynchronous bandwidth is around 4.3 Gigabyte/sec and on the InfiniBand cluster about 5.6 Gigabyte/sec.

So, what have we learned?

To summarize, AWS's newer virtual Ethernet performs just as well as or better than real Ethernet.

But InfiniBand in supercomputer clusters is considerably better than Ethernet: the point-to-point throughput is more than a factor 5 higher, and the latency a factor 8 lower.

31 - MPI Random Async Block Benchmark

So since we now know that InfiniBand is really a lot better, I am going to present more results showing how to maximize the performance when using InfiniBand.

This following experiment is identical to the ASync Send from the previous slide: it uses MPI and transmits blocks of size B between a number of hosts in a randomized fashion.

Each host has a fixed number of send or receive operations active at any time. In the figure there are exactly four.

The transmission pattern is determined by a globally synchronized random number generator. This way, all hosts know the pattern without having to communicate, and they can easily calculate whether to send or receive a block and which partner to communicate with in each step.
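One way such a globally synchronized pattern could be computed is sketched below; the benchmark's actual scheme may differ. All hosts seed `std::mt19937` with the same value, shuffle the host list identically, and pair consecutive entries, so every host derives its partner and role locally. `Transfer` and `PlanStep` are hypothetical names, and the sketch assumes an even number of hosts and one transfer per host per step.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <random>
#include <utility>
#include <vector>

// Role of a host in one step of the hypothetical random transfer pattern.
struct Transfer {
    std::size_t partner;  // host to exchange a block with
    bool sends;           // true: send to partner, false: receive from partner
};

// Every host calls this with the same seed and step number and obtains a
// consistent pairing without any communication (assumes even num_hosts).
Transfer PlanStep(std::uint32_t seed, std::uint32_t step,
                  std::size_t num_hosts, std::size_t my_host) {
    std::mt19937 rng(seed + step);  // same seed on all hosts -> same sequence
    std::vector<std::size_t> perm(num_hosts);
    for (std::size_t i = 0; i < num_hosts; ++i) perm[i] = i;
    // Fisher-Yates shuffle on raw mt19937 output, which the C++ standard fully
    // specifies (std::shuffle's algorithm is not); modulo bias is ignored here.
    for (std::size_t i = num_hosts; i > 1; --i)
        std::swap(perm[i - 1], perm[rng() % i]);
    // Pair consecutive entries: perm[2k] sends to perm[2k + 1].
    for (std::size_t k = 0; k + 1 < num_hosts; k += 2) {
        if (perm[k] == my_host) return {perm[k + 1], true};
        if (perm[k + 1] == my_host) return {perm[k], false};
    }
    return {my_host, false};  // only reached for an odd leftover host
}
```

Because the shuffle uses only the fully specified engine output, hosts built with different standard libraries would still agree on the pattern.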

At the same time the pattern is unpredictable and really tests the network's switching capacity.

There are two parameters which are interesting to vary: the block size and the number of simultaneously active requests.

32 - Random Blocks on ForHLR II, 8 Hosts

And these are the results on the ForHLR II system with eight InfiniBand hosts.

I plotted the achieved bandwidth versus the transmitted block size, and the various series in the plot are different numbers of simultaneous requests.

One can clearly see that with a single issued send or receive request, which is the red line, the network can in no way be saturated. It takes 64 simultaneous requests or more to get near the full transmission capacity of the InfiniBand network.

And you also need larger blocks, ideally 128 KiB in size.

In summary, it is really, really hard to max out the InfiniBand network's capacity. Blocks that are too small are bad, but so are transmissions that are too large. And you really have to issue lots of asynchronous requests simultaneously.

33 - Variety of Parallel Computing Hosts

Okay that brings us to the wrap-up of our tour through parallel computing machines.

And I believe the best summary is that it resembles a fish market:

The variety of parallel systems is large, some have special CPUs, some have commodity hardware, some have Ethernet, some InfiniBand, some have local disks, some don't, and so on.

And in the end the prices for the computing resources fluctuate daily and exotic systems can demand higher prices.

Well, just like at this fish market in Seattle.

34 - Introduction to Parallel Machines: Models

Before jumping into Thrill it is good to review some more parallel programming concepts and models.

35 - Control Model: Fork-Join

Maybe the most important concept or model is "fork-join".

In fork-join, a thread of execution splits up into multiple parallel threads, which is called a fork. When the multiple threads finish, a join brings the results together and processes them, and then sequential execution can continue. Forks and joins can also be done recursively by threads running in parallel.

While this may seem trivial, it is a very good mental model to work with when parallelizing applications. The fork starts multiple independent jobs, thereby splitting up work, and the join combines the results of these jobs.

The challenge is then of course to construct phases in which all parallel tasks run equally long, such that computation resources are used efficiently, because each phase takes as long as the longest thread inside it.
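A minimal fork-join sketch in plain C++ with `std::thread` (the name `ForkJoinSum` is illustrative): the fork splits an array into nearly equal ranges, one per thread, and the join waits for all threads and combines their partial sums. The join can only proceed once the slowest thread is done, which is why equal-sized tasks matter.

```cpp
#include <cassert>
#include <cstddef>
#include <numeric>
#include <thread>
#include <vector>

// Fork: split [0, n) into num_threads nearly equal ranges and sum each range in its own thread.
// Join: wait for all threads, then combine the partial sums sequentially.
long long ForkJoinSum(const std::vector<long long>& data, unsigned num_threads) {
    assert(num_threads > 0);
    std::vector<long long> partial(num_threads, 0);  // one slot per thread, so no data race
    std::vector<std::thread> threads;
    const std::size_t n = data.size();
    for (unsigned t = 0; t < num_threads; ++t) {
        std::size_t begin = n * t / num_threads;
        std::size_t end = n * (t + 1) / num_threads;
        threads.emplace_back([&data, &partial, t, begin, end] {
            partial[t] = std::accumulate(data.begin() + begin, data.begin() + end, 0LL);
        });
    }
    for (std::thread& th : threads) th.join();  // the phase ends when the slowest thread finishes
    return std::accumulate(partial.begin(), partial.end(), 0LL);
}
```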

36 - Trivially Parallelizable Work

Let's look at the simplest case: when the workload can be split up perfectly into independent equal-sized subtasks, it is called trivially parallelizable, or embarrassingly parallel.

Of course, on the other hand, it is not always easy to see when a task is embarrassingly parallel.

In the fork-join model this is just one phase. Of course, things can get messy if the subtasks are not equally long. Then one needs a load balancing system, such as a batch scheduler, which assigns subtasks to processors.

37 - Control Model: Master-Worker

The simplest and most widely used task scheduler is the master-worker paradigm.

In that model, there is a master control program, which has a queue of jobs. It may know a lot about these jobs, such as how long they will take, or what resources they need.

The master then deals out jobs to a fixed number of workers, and while doing so it can schedule the jobs in some intelligent manner.

This model has many advantages, mainly that it is simple, workers can easily be added or removed, and it implicitly balances uneven workloads. It is used by most Big Data computation frameworks.
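The following in-process sketch illustrates the master-worker idea, using the hypothetical names `Master` and `RunWorkers`: the master is just a locked job queue, and each worker thread keeps asking for the next job until none remain. A real framework would send jobs over the network, but the implicit load balancing is the same: faster workers simply end up taking more jobs.

```cpp
#include <cassert>
#include <functional>
#include <mutex>
#include <queue>
#include <thread>
#include <vector>

// The "master": a job queue that hands out one job at a time under a lock.
class Master {
public:
    explicit Master(std::vector<int> jobs) {
        for (int j : jobs) queue_.push(j);
    }
    // Hands out the next job; returns false when no jobs remain.
    bool NextJob(int* job) {
        std::lock_guard<std::mutex> lock(mutex_);
        if (queue_.empty()) return false;
        *job = queue_.front();
        queue_.pop();
        return true;
    }

private:
    std::mutex mutex_;
    std::queue<int> queue_;
};

// Starts num_workers threads that pull jobs until the master runs dry.
// `process` is called concurrently and must be thread-safe.
// Returns how many jobs each worker processed.
std::vector<int> RunWorkers(Master& master, int num_workers,
                            const std::function<void(int)>& process) {
    assert(num_workers > 0);
    std::vector<int> done(num_workers, 0);
    std::vector<std::thread> workers;
    for (int w = 0; w < num_workers; ++w) {
        workers.emplace_back([&master, &done, &process, w] {
            int job;
            while (master.NextJob(&job)) {
                process(job);
                ++done[w];  // each worker only touches its own counter
            }
        });
    }
    for (std::thread& t : workers) t.join();
    return done;
}
```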

However, there are also disadvantages. Namely the master is a single point of failure. It is also not truly scalable, because the master has to know everything about all workers and all jobs.

And it may also increase latency, due to the round trips involved in sending jobs to the workers, which may also require transmitting the data for each job, and so on.

38 - Control Model: Spark vs. MPI/Thrill

We already considered this slide in the introduction.

Apache Spark follows a master-worker model. There is a driver program which is written by the user and contains RDD operations. This driver program is transmitted to a long-running cluster manager, which acts as a high-level resource scheduler. The cluster manager spawns one or more executors, which manage data and code, and use the master-worker paradigm to schedule smaller short-lived jobs.

MPI and Thrill follow a different approach. At launch time, a fixed number of threads is allocated, and they collectively run the program. This model has the advantage of the workers being able to synchronize implicitly.

However, it also requires one to think a lot more about how to balance the work, so as not to waste processing time.

39 - Bulk Synchronous Parallel (BSP)

A good way to think about that challenge is the Bulk Synchronous Parallel model, or BSP model.

In the BSP model, there is a fixed number of processors, which perform local work. During that work they can send messages to other processors, however these messages are sent asynchronously, which means that they are only available at the destination after a barrier.

The time from one barrier to the next is called a superstep. And one wants to minimize the number of barriers and the wasted time inside a superstep.
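A small sequential simulation can make the superstep rule tangible. This sketch (the `BspMachine` type is hypothetical) buffers every message sent during local work and delivers them all only at the barrier, so no processor can read a message in the same superstep in which it was sent.

```cpp
#include <cassert>
#include <functional>
#include <vector>

// Sequential simulation of BSP supersteps with p processors.
struct BspMachine {
    int p;
    std::vector<std::vector<int>> inbox;   // messages readable in the current superstep
    std::vector<std::vector<int>> outbox;  // messages sent in the current superstep

    explicit BspMachine(int procs) : p(procs), inbox(procs), outbox(procs) { assert(procs > 0); }

    // Buffers a message; it becomes visible at dest only after the barrier.
    void Send(int dest, int value) { outbox[dest].push_back(value); }

    // Runs local_work(rank, machine) for every processor, then performs the barrier.
    void Superstep(const std::function<void(int, BspMachine&)>& local_work) {
        for (int rank = 0; rank < p; ++rank) local_work(rank, *this);
        // Barrier: deliver all buffered messages and drop the old inboxes.
        inbox.swap(outbox);
        for (auto& box : outbox) box.clear();
    }
};
```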

It is good to think about Thrill DIA operations as BSP programs. And for many operations, Thrill reaches the minimum number of supersteps possible.

40 - Introduction to Parallel Machines: Implementations and Frameworks

After considering models, we are now going to look at some of the most popular actual frameworks and implementations.

41 - MPI (Message Passing Interface)

MPI definitely belongs in this category, though I would say it is not actually popular; widely used is a better description for MPI.

MPI itself is only a standard defining an interface. There are several different implementations, and also specialized ones for the large supercomputers, where it is still the most used communication interface in applications.

This slide shows some of the most important collective operations. For example Allgather, where each processor contributes one item, and after the operation all processors have received the items from all other processors.

42 - Map/Reduce Model

An entirely different model is the Map/Reduce model, which was popularized by Google in 2004 and initiated an entirely new research area.

One programs in Map/Reduce by specifying two functions: map and reduce. Map takes an item and emits zero or more elements. These elements are then partitioned and shuffled by a hash method such that all items with the same key are processed by one reduce function.

This here is the canonical WordCount example.
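WordCount in the Map/Reduce model can be sketched sequentially in C++ as follows; the function names are illustrative, and a single process stands in for the cluster. The map step emits (word, 1) pairs, and the shuffle routes each pair to reducer `hash(word) % num_reducers`, so all pairs with the same key are summed by one reducer.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <map>
#include <sstream>
#include <string>
#include <utility>
#include <vector>

using WordCounts = std::map<std::string, int>;

// Map: emit (word, 1) for every whitespace-separated word of a line.
std::vector<std::pair<std::string, int>> MapLine(const std::string& line) {
    std::vector<std::pair<std::string, int>> out;
    std::istringstream in(line);
    std::string word;
    while (in >> word) out.emplace_back(word, 1);
    return out;
}

// Shuffle + Reduce: route each pair to reducer hash(key) % num_reducers, so all
// pairs with the same key meet at one reducer, which sums their counts.
std::vector<WordCounts> MapReduceWordCount(const std::vector<std::string>& lines,
                                           std::size_t num_reducers) {
    assert(num_reducers > 0);
    std::vector<WordCounts> reducers(num_reducers);
    std::hash<std::string> hasher;
    for (const std::string& line : lines)
        for (const auto& [word, one] : MapLine(line))
            reducers[hasher(word) % num_reducers][word] += one;
    return reducers;
}
```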

43 - Map/Reduce Framework

But what Map/Reduce does differently from MPI and other frameworks is to free the user from having to think about individual processors.

The Map/Reduce framework in some sense is auto-magical. It is supposed to provide automatic parallelization, automatic data distribution and load balancing, and automatic fault tolerance.

On the one hand this makes the model so easy and attractive, on the other hand it makes the work of the framework very, very, very hard. And that is why many Map/Reduce frameworks appear to be slow: they have to guess correctly how to automatically fulfill all these expectations.

44 - Apache Spark and Apache Flink

Then there are what I call the "post"-Map/Reduce frameworks: namely Apache Spark and Apache Flink.

Spark was started in 2009 in the US at Berkeley and is centered around so-called RDDs, and later DataFrames. As said before, it follows a driver-master-worker architecture and has become very popular for many tasks in the industry.

Flink on the other hand was started as Stratosphere at the TU Berlin in Germany by the group around Professor Volker Markl. This group has a strong background in database design and data management, and this shows clearly in Flink's design.

Flink has an optimizer and parallel work scheduler similar to a relational database. But Spark on the other hand has also been adding these components lately.

45 - Flavours of Big Data Frameworks

And then there are many, many other frameworks. This is a slide on which I tried to categorize many of the popular names and frameworks.

Depending on the application it is of course better to use specialized Big Data frameworks, for example for interactive queries, or NoSQL databases, or machine learning.

There is probably no one-size-fits-all solution.

In Germany there is a funny expression for that: there is no such thing as an "eierlegende Wollmilchsau", which is pictured here, a fantasy figure of an egg-laying pig with woolen skin that also produces milk.

And Thrill is also not a one-size-fits-all solution.

46 - The Thrill Framework: Thrill's DIA Abstraction and List of Operations

And now, after this long introduction into parallel programming and its many challenges, let's dive into the high-level programming interface of our Thrill framework.

47 - Distributed Immutable Array (DIA)

This high-level interface revolves around the concept of a DIA, a distributed immutable array, which is a conceptual array of C++ items that is distributed transparently onto the cluster in some way.

The framework intentionally hides how the array is distributed, but one can imagine that it is divided equally among the processors, in order.

The array can contain C++ objects of basically any type, characters, integers, vectors of integers, structs, and classes, as long as they are serializable.

But you cannot access the items directly, instead you apply transformations to the entire array as a whole. For example, you can apply a Map transformation which can modify each item, or you can Sort the array with some comparison function. Each time the result is a new DIA.

47a - Distributed Immutable Array (DIA)

However, inside the framework these distributed arrays usually don't exist explicitly. Instead a DIA is a chain of operations, which is built lazily and the actual operations are only executed when the user triggers an Action, which must deliver some external result.

Only then does the framework execute the operations necessary to produce that result. The DIAs in the execution are actually only the conceptual "glue" between operations; the operations themselves determine how to store the data. For example, Sort may store the items in some presorted fashion.
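To illustrate lazy evaluation, here is a toy C++ pipeline; `LazyChain`, `Generate`, `Map`, and `Sum` are hypothetical names for this sketch and not Thrill's API. Calling `Map` only composes functions; the items are produced and pushed through the composed chain only when the `Sum` action is called.

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// Toy lazy pipeline: Map() only records a function; nothing runs until the
// Sum() "action" pulls every generated item through the composed chain.
template <typename Fn>
class LazyChain {
public:
    LazyChain(std::size_t n, Fn fn) : n_(n), fn_(std::move(fn)) {}

    // Returns a new chain whose function applies fn_ and then g; nothing executes yet.
    template <typename G>
    auto Map(G g) const {
        auto composed = [f = fn_, g](std::size_t i) { return g(f(i)); };
        return LazyChain<decltype(composed)>(n_, composed);
    }

    // Action: triggers execution of the whole chain for indices 0..n-1.
    auto Sum() const {
        decltype(fn_(0)) total{};
        for (std::size_t i = 0; i < n_; ++i) total += fn_(i);
        return total;
    }

private:
    std::size_t n_;
    Fn fn_;
};

// Source: a chain producing fn(0), ..., fn(n-1) on demand.
template <typename Fn>
LazyChain<Fn> Generate(std::size_t n, Fn fn) { return LazyChain<Fn>(n, std::move(fn)); }
```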

47b - Distributed Immutable Array (DIA)

One can visualize the chain of operations as a graph, which we call a DIA data-flow graph. In these graphs the operations are vertices, and the DIAs are actually the edges going out of operations and into the next.

Now, why have a distributed array and not a distributed set or similar? I believe that becomes clearer when we look at the following excerpt of some of the operations.

48 - List of Primitives (Excerpt)

This is the original short list of important operations, the current Thrill version has many more DIA operations and variations that have been added over time.

There are two basic types of operations: local operations, LOps for short, and distributed operations, called DOps.

LOps cannot communicate; examples are Map, Filter, FlatMap, and BernoulliSample. DOps, on the other hand, can communicate; they contain at least one BSP-model barrier, and there are many different types of them.

There is actually even a third kind of "auxiliary" DIA operations, which are used to change and augment the DIA graph, but more about those later.

Here is a short list of DOps: Sort, ReduceByKey, PrefixSum, Zip, Union, and so on. Each of these DOps is implemented as a C++ template class that runs a distributed algorithm on a stream of input items.

There are two special types of DOps: Sources and Actions.

Sources start a DIA chain by reading external data or generating it on-the-fly. Examples of these are Generate, ReadLines, and ReadBinary.

And then there are Actions, like Sum, Min, WriteBinary, or WriteLines, which are also distributed operations, but actually trigger execution of the chain of DIA operations, and write files or deliver a result value on every worker which determines the future program flow.

Now back to the question, why a distributed array and not a distributed set? In the very beginning we looked at a list of operations that we wanted to support. These operations we knew would be possible to implement in a scalable distributed manner, and yes, some of them had a set as output. For example ReduceByKey outputs a set, and Sort takes a set and produces an ordered array.

But in the end, there were so few unordered sets, that we decided it was best to have just one central data structure instead of a complex type algebra. So we simply consider unordered sets as arrays with an arbitrary order! Internally, the items are always stored in some order, so we just take this internal order as the arbitrary array order.

49 - Local Operations (LOps)

And now come a lot of slides with pictures of what the various DIA operations do, because pictures say more than a thousand words.

These are Map, Filter, and FlatMap, which all are local operations.

Map maps each item of type A to an item of type B. Hence, it maps a DIA of type A to a DIA of type B, both of exactly the same size.

Filter identifies items that should be kept or removed using a boolean test function.

And FlatMap can output zero, one, or more items of type B per input of type A. This allows one to expand or contract a DIA.
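Sequential C++ analogues of the three local operations on a `std::vector` may help to pin down their semantics. The helper names are hypothetical; the emitter-style FlatMap, where the user function may call `emit` any number of times per input, is one common way to express it.

```cpp
#include <cassert>
#include <string>
#include <type_traits>
#include <utility>
#include <vector>

// Map: exactly one output item of type B per input item of type A.
template <typename A, typename F>
auto MapVec(const std::vector<A>& in, F f) {
    using B = std::decay_t<decltype(f(std::declval<const A&>()))>;
    std::vector<B> out;
    out.reserve(in.size());
    for (const A& a : in) out.push_back(f(a));
    return out;
}

// Filter: keeps the items for which the predicate returns true.
template <typename A, typename Pred>
std::vector<A> FilterVec(const std::vector<A>& in, Pred keep) {
    std::vector<A> out;
    for (const A& a : in)
        if (keep(a)) out.push_back(a);
    return out;
}

// FlatMap: the function receives an emitter and may call it zero, one, or more times per input.
template <typename B, typename A, typename F>
std::vector<B> FlatMapVec(const std::vector<A>& in, F f) {
    std::vector<B> out;
    auto emit = [&out](const B& b) { out.push_back(b); };
    for (const A& a : in) f(a, emit);
    return out;
}
```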

50 - DOps: ReduceByKey

ReduceByKey takes a DIA of type A and two arguments: a key extractor function and a reduction function.

The key extractor is applied to each item and all items with the same key are reduced using the reduction function.

The keys are delivered in the output DIA in a random order, which is actually determined by the hash function applied internally to the keys.

51 - DOps: GroupByKey

GroupByKey is similar: it takes a DIA of type A, a key extractor function, and a group function.

This time all items with the same key are delivered to the group function in form of an iterable stream or a vector. This however means that all these items have to be collected at the same processor.

Compare this to ReduceByKey, where the reduction can happen in a distributed fashion. So you should always prefer ReduceByKey whenever possible.
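The reason to prefer ReduceByKey can be seen in a small simulation (hypothetical names, one process standing in for p workers): each worker first reduces its local items, and only the pre-reduced pairs are routed to worker `hash(key) % p` for the final reduction. A GroupByKey would have to ship every input item instead, because the group function needs to see all of them together.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <string>
#include <unordered_map>
#include <utility>
#include <vector>

using Item = std::pair<std::string, int>;

struct ReduceResult {
    std::vector<std::unordered_map<std::string, int>> per_worker;  // final reduced items per worker
    std::size_t items_sent = 0;                                     // pairs shipped to target workers
};

// Simulated ReduceByKey over p workers, each holding a local slice of items.
// Phase 1: each worker pre-reduces its own items (no communication needed).
// Phase 2: each pre-reduced pair goes to worker hash(key) % p, which reduces again.
ReduceResult SimulatedReduceByKey(const std::vector<std::vector<Item>>& local,
                                  const std::function<int(int, int)>& reduce) {
    const std::size_t p = local.size();
    assert(p > 0);
    ReduceResult res;
    res.per_worker.resize(p);
    std::hash<std::string> hasher;
    for (const auto& slice : local) {
        std::unordered_map<std::string, int> pre;
        for (const Item& it : slice) {
            auto found = pre.find(it.first);
            if (found == pre.end()) pre.emplace(it.first, it.second);
            else found->second = reduce(found->second, it.second);
        }
        for (const auto& [key, value] : pre) {
            auto& target = res.per_worker[hasher(key) % p];
            auto found = target.find(key);
            if (found == target.end()) target.emplace(key, value);
            else found->second = reduce(found->second, value);
            ++res.items_sent;
        }
    }
    return res;
}
```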

52 - DOps: ReduceToIndex

And then there is ReduceToIndex, which is like ReduceByKey but you are allowed to define the order of the items in the output array.

ReduceToIndex takes a DIA of type A and three arguments: an index extractor, a reduction function, and the result size.

The index extractor determines the target index for each item, and all items with the same target index are reduced together into that slot in the output array.

There may of course be empty cells in the result, which are filled with zeros or default constructed items.

And the output size is needed such that the resulting DIA can be distributed evenly among the processors.
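A sequential sketch of ReduceToIndex semantics (the name `ReduceToIndexSeq` is hypothetical): each item is reduced into the slot its index extractor picks, and slots that receive nothing keep a default-constructed value. The test uses PageRank-style (vertex, weight) contributions.

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// Every item is reduced into slot index_of(item) of an output array of fixed size;
// slots that receive no item keep a default-constructed value ("empty cells").
template <typename T, typename IndexFn, typename ReduceFn>
std::vector<T> ReduceToIndexSeq(const std::vector<T>& items, IndexFn index_of,
                                ReduceFn reduce, std::size_t result_size) {
    std::vector<T> out(result_size);
    std::vector<bool> filled(result_size, false);
    for (const T& item : items) {
        std::size_t idx = index_of(item);
        assert(idx < result_size);
        // The first item in a slot is stored as-is; later ones are combined with reduce.
        out[idx] = filled[idx] ? reduce(out[idx], item) : item;
        filled[idx] = true;
    }
    return out;
}
```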

Now ReduceToIndex really highlights the use of distributed arrays as a data structure. It is for example used in the PageRank benchmark to reduce incoming weights on a list of vertices.

53 - DOps: GroupToIndex

There is of course also a GroupToIndex variant, which like GroupByKey collects all items with the same key or index and delivers them to a group function as a stream. But like ReduceToIndex you specify the target index of each item instead of a random hash key.

54 - DOps: InnerJoin

There is also an experimental InnerJoin, which takes two DIAs of different types A and B, a key extractor function for each of them, and a join operation.

The key extractors are executed on all items, and all items with keys from A that match with keys from B are delivered to the join function. The output array is again in a random order.
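InnerJoin can be pictured as a hash join; this sequential C++ sketch (hypothetical `HashInnerJoin`) builds a multimap on the keys of the first input and probes it with each item of the second. In a distributed setting, both inputs would first have to be partitioned by key so that matching items meet on the same worker; that step is omitted here.

```cpp
#include <cassert>
#include <string>
#include <type_traits>
#include <unordered_map>
#include <utility>
#include <vector>

// Build a hash table over the keys of `as`, then probe it with every item of `bs`
// and call join_fn for each pair of items with matching keys.
template <typename A, typename B, typename KeyA, typename KeyB, typename JoinFn>
auto HashInnerJoin(const std::vector<A>& as, const std::vector<B>& bs,
                   KeyA key_a, KeyB key_b, JoinFn join_fn) {
    using Key = std::decay_t<decltype(key_a(as.front()))>;
    using Out = std::decay_t<decltype(join_fn(as.front(), bs.front()))>;
    std::unordered_multimap<Key, const A*> table;
    for (const A& a : as) table.emplace(key_a(a), &a);
    std::vector<Out> out;
    for (const B& b : bs) {
        auto range = table.equal_range(key_b(b));
        for (auto it = range.first; it != range.second; ++it)
            out.push_back(join_fn(*it->second, b));
    }
    return out;
}
```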

If you are more interested in InnerJoin, it is best to read the corresponding master's thesis.

55 - DOps: Sort and Merge

As often mentioned before, there is a Sort method. It takes a less comparator, which is the standard way to write order relations in C++.

And there is also a Merge operation, which also takes a less comparator, and two or more presorted DIAs.
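Merging two or more presorted sequences can be sketched with a min-heap over the current head of each sequence, using the same less-comparator convention as `std::sort`. This is a generic sequential k-way merge (`MultiwayMerge` is a hypothetical name), not Thrill's distributed Merge implementation.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <queue>
#include <utility>
#include <vector>

// k-way merge of presorted runs: a heap holds the current head of each run.
template <typename T, typename Less = std::less<T>>
std::vector<T> MultiwayMerge(const std::vector<std::vector<T>>& runs, Less less = Less()) {
    using Head = std::pair<std::size_t, std::size_t>;  // (run index, position in run)
    // std::priority_queue is a max-heap, so invert the comparison to pop the smallest head first.
    auto greater = [&runs, &less](const Head& x, const Head& y) {
        return less(runs[y.first][y.second], runs[x.first][x.second]);
    };
    std::priority_queue<Head, std::vector<Head>, decltype(greater)> heap(greater);
    std::size_t total = 0;
    for (std::size_t r = 0; r < runs.size(); ++r) {
        total += runs[r].size();
        if (!runs[r].empty()) heap.push({r, 0});
    }
    std::vector<T> out;
    out.reserve(total);
    while (!heap.empty()) {
        Head h = heap.top();
        heap.pop();
        out.push_back(runs[h.first][h.second]);
        // Advance within the run we just consumed from.
        if (h.second + 1 < runs[h.first].size()) heap.push({h.first, h.second + 1});
    }
    return out;
}
```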

56 - DOps: PrefixSum and ExPrefixSum

Because we have an array, we can define a PrefixSum operation, which, well, calculates the prefix sum of the DIA elements. In the C++ STL, the same operation is called std::partial_sum().

There are actually two variants of PrefixSum: the inclusive one, where the item at each index is included in the sum at that index, and the exclusive one, where it is not.
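In C++17, the two variants correspond to `std::inclusive_scan` (equivalent to `std::partial_sum`) and `std::exclusive_scan`. The third function below sketches one standard way a prefix sum over data split across workers can be computed: each worker sums its slice, an exclusive scan over these totals gives each worker its starting offset, and each worker then scans locally. The function names are hypothetical, and this is not a claim about Thrill's internals.

```cpp
#include <cassert>
#include <cstddef>
#include <numeric>
#include <vector>

// Inclusive: out[i] = v[0] + ... + v[i].
std::vector<int> InclusivePrefix(const std::vector<int>& v) {
    std::vector<int> out(v.size());
    std::inclusive_scan(v.begin(), v.end(), out.begin());
    return out;
}

// Exclusive: out[i] = init + v[0] + ... + v[i-1].
std::vector<int> ExclusivePrefix(const std::vector<int>& v, int init = 0) {
    std::vector<int> out(v.size());
    std::exclusive_scan(v.begin(), v.end(), out.begin(), init);
    return out;
}

// Prefix sum over slices held by different workers: local totals, an exclusive
// scan over the totals for per-worker offsets, then a local inclusive scan.
std::vector<std::vector<int>> SlicedInclusivePrefix(const std::vector<std::vector<int>>& slices) {
    std::vector<int> totals;
    for (const auto& s : slices) totals.push_back(std::accumulate(s.begin(), s.end(), 0));
    std::vector<int> offsets = ExclusivePrefix(totals);
    std::vector<std::vector<int>> out;
    for (std::size_t w = 0; w < slices.size(); ++w) {
        std::vector<int> local = InclusivePrefix(slices[w]);
        for (int& x : local) x += offsets[w];
        out.push_back(local);
    }
    return out;
}
```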

57 - Sample (DOp), BernoulliSample (LOp)

There are two Sample operations: a Sample method returning a fixed-size result, and a BernoulliSample which takes only the probability of an item surviving, which means the size of BernoulliSample's result can obviously vary widely.
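The difference between the two samplers is easy to see in code. This sketch uses classic reservoir sampling (Algorithm R) for the fixed-size case and `std::bernoulli_distribution` for the Bernoulli case; both are sequential illustrations with hypothetical function names, not Thrill's distributed implementations.

```cpp
#include <cassert>
#include <cstddef>
#include <random>
#include <vector>

// Fixed-size sample: reservoir sampling (Algorithm R) returns exactly min(k, n)
// items, and every input item ends up in the sample with equal probability.
template <typename T>
std::vector<T> ReservoirSample(const std::vector<T>& in, std::size_t k, std::mt19937& rng) {
    std::vector<T> sample;
    for (std::size_t i = 0; i < in.size(); ++i) {
        if (sample.size() < k) {
            sample.push_back(in[i]);
        } else {
            std::uniform_int_distribution<std::size_t> pick(0, i);
            std::size_t j = pick(rng);
            if (j < k) sample[j] = in[i];  // replace a random reservoir slot
        }
    }
    return sample;
}

// Bernoulli sample: each item survives independently with probability p,
// so the output size is random (binomially distributed around p * n).
template <typename T>
std::vector<T> BernoulliSample(const std::vector<T>& in, double p, std::mt19937& rng) {
    assert(p >= 0.0 && p <= 1.0);
    std::bernoulli_distribution keep(p);
    std::vector<T> out;
    for (const T& x : in)
        if (keep(rng)) out.push_back(x);
    return out;
}
```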

58 - DOps: Zip and Window

And there are zip functions to combine DIAs. Zip takes two or more DIAs of equal size, aligns them, and delivers all items with the same array index to a so-called zip function, which can process them and outputs a new combined item. There are also variants of Zip to deal with DIAs of unequal size by cutting or padding them.

And since Zip's most common use was to add the array index to each item, we implemented a specialized ZipWithIndex method that is a bit more optimized than the usual Zip method.

And then there is the Window method, which is actually quite strange: Window delivers every window of k consecutive items to a function. There are also variants which deliver only disjoint, meaning non-overlapping, windows.
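Sequential C++ sketches of Zip, ZipWithIndex, and Window may clarify what each delivers to the user function; the names and signatures are illustrative only. `WindowVec` takes a step parameter: step 1 gives all overlapping windows, and step k gives the disjoint ones.

```cpp
#include <cassert>
#include <cstddef>
#include <type_traits>
#include <utility>
#include <vector>

// Zip: combines the items with equal index from two equal-sized arrays.
template <typename A, typename B, typename F>
auto ZipVec(const std::vector<A>& a, const std::vector<B>& b, F zip) {
    assert(a.size() == b.size());
    std::vector<std::decay_t<decltype(zip(a.front(), b.front()))>> out;
    for (std::size_t i = 0; i < a.size(); ++i) out.push_back(zip(a[i], b[i]));
    return out;
}

// ZipWithIndex: the common special case of pairing each item with its index.
template <typename A>
std::vector<std::pair<std::size_t, A>> ZipWithIndexVec(const std::vector<A>& a) {
    std::vector<std::pair<std::size_t, A>> out;
    for (std::size_t i = 0; i < a.size(); ++i) out.emplace_back(i, a[i]);
    return out;
}

// Window: passes each run of k consecutive items to fn, starting every `step` items.
template <typename A, typename F>
auto WindowVec(const std::vector<A>& a, std::size_t k, std::size_t step, F fn) {
    assert(k > 0 && step > 0);
    using R = std::decay_t<decltype(fn(std::vector<A>()))>;
    std::vector<R> out;
    for (std::size_t begin = 0; begin + k <= a.size(); begin += step)
        out.push_back(fn(std::vector<A>(a.begin() + begin, a.begin() + begin + k)));
    return out;
}
```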

I find Window very strange because it reintroduces the notion of locality on the array, something that all of the previous operations did not actually consider.

59 - Auxiliary Ops: Cache and Collapse

There are two special auxiliary operations: Cache and Collapse. In the technical details section later on, I will explain more about the reasons behind them.

Cache is simple: it actually materializes the DIA data as an array. This is useful for caching, but also vital when generating random data.

Collapse, on the other hand, is a folding operation, which is needed to fold Map, FlatMap, or other local operations, which Thrill tries to chain or combine together. A DIA is actually a lazy chain of template-optimized operations, here written as f1 and f2. These functions are usually folded into DIAs automatically, but in loops and for function return values it is sometimes necessary to fold or collapse them manually into a DIA object. But more about that later.

Cache and Collapse are sometimes hard to distinguish; it is easiest to tell them apart by remembering their costs: the cost of Cache is the size of the entire DIA data that is cached, while the cost of Collapse is only one virtual function call per item, which is very small.
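Why a manual collapse is needed in loops can be illustrated in plain C++; this is an analogy with hypothetical names, not Thrill's implementation. Composing lambdas yields a new compile-time type every time, so a composed chain cannot be reassigned to the same variable inside a loop. Wrapping it in a `std::function` erases the type at the cost of one indirect call per item per collapse, while materializing the values, which is what caching amounts to, costs memory for every item.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <vector>

// The "collapsed" type: every chain of int -> int functions fits into it.
using Collapsed = std::function<int(int)>;

// Composition yields a new lambda type on every call.
template <typename F, typename G>
auto Compose(F f, G g) { return [f, g](int x) { return g(f(x)); }; }

// Adds one local step per loop iteration; this only compiles because each new
// composition is collapsed back into the same type (one more indirect call per item).
Collapsed BuildInLoop(int rounds) {
    Collapsed chain = [](int x) { return x; };
    for (int i = 0; i < rounds; ++i)
        chain = Compose(chain, [](int x) { return x * 2 + 1; });
    return chain;
}

// Cache analogue: stores the results for inputs 0..n-1; memory grows with n.
std::vector<int> Materialize(const Collapsed& chain, std::size_t n) {
    std::vector<int> data(n);
    for (std::size_t i = 0; i < n; ++i) data[i] = chain(static_cast<int>(i));
    return data;
}
```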

60 - Source DOps: Generate, -ToDIA

And now for a list of Source operations.

The most basic Source operation to create a DIA is called Generate. Generate, well, it generates a DIA of size n by passing the numbers 0 to n-1 to a generator function which can actually then construct the items. It is often used to generate random input or simply index numbers.

There is also a plain Generate version, which simply creates a DIA containing the numbers 0 to n-1. The two parameter version is identical with the plain Generate followed by a Map.

Generate should however not be used to convert a vector or array into a DIA. For that there are two simpler methods: ConcatToDIA and EqualToDIA. These two operations differ in how the input vector at each worker is viewed.

ConcatToDIA concatenates the vector data at each worker into a long DIA, which is what you want when each worker has one slice of the data.

EqualToDIA on the other hand assumes that the vector data on each worker is identical, and it will cut out that part of the vector which the worker will be responsible for.
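The two views can be sketched as follows, assuming worker r of p owns the global index range [r*n/p, (r+1)*n/p); the helper names are hypothetical, and the exact split Thrill uses may differ.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Even split: worker r of p owns global indices [SliceBegin(r), SliceBegin(r + 1)).
inline std::size_t SliceBegin(std::size_t r, std::size_t n, std::size_t p) { return r * n / p; }

// EqualToDIA-style view: every worker holds the same full vector and keeps only its slice.
template <typename T>
std::vector<T> EqualSlice(const std::vector<T>& full, std::size_t rank, std::size_t p) {
    assert(p > 0 && rank < p);
    std::size_t n = full.size();
    return std::vector<T>(full.begin() + SliceBegin(rank, n, p),
                          full.begin() + SliceBegin(rank + 1, n, p));
}

// ConcatToDIA-style view: each worker holds a different local vector, and the
// conceptual array is their concatenation in worker-rank order.
template <typename T>
std::vector<T> ConcatView(const std::vector<std::vector<T>>& per_worker) {
    std::vector<T> all;
    for (const auto& local : per_worker) all.insert(all.end(), local.begin(), local.end());
    return all;
}
```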

61 - Source DOps: ReadLines, ReadBinary

So beyond these three Source operations, there are currently two main input file formats used in Thrill: text files and binary files.

Text files can be read line-wise into a DIA. Each item in the result DIA is a std::string containing a line. The ReadLines operation can also take a list of files, which are then concatenated.

Again, there are two scenarios: one where all Thrill hosts see all the files, for example when they are stored in a distributed file system such as NFS or Lustre.

And another scenario, where each Thrill host sees only its local directory of files. This is similar to the difference between ConcatToDIA and EqualToDIA.

The default case is the distributed file system case, because that is what we see on our supercomputers. If you need to concatenate local files, please look up the LocalStorage variant of the operations.

So text files are easy and straight-forward, but Thrill will also read pure binary files.

When reading binary files, these are just parsed directly without any format definition or similar. They are simply deserialized by Thrill's internal serializer.

This is most useful to load raw data files containing trivial data types such as integers and other PODs, but it can also be used to reload data objects that have been previously stored by Thrill.

62 - Actions: WriteLines, WriteBinary

There are of course also matching write operations: WriteLines and WriteBinary.

WriteLines takes a DIA of std::strings and writes it to the disk.

WriteBinary takes any DIA and writes it to disk in Thrill's serialization format. This format is trivial for POD data types, and very simple for more complex ones and the written files can be loaded again using ReadBinary.

Now it is important to note that these functions create many files, not just one. Namely each worker writes its portion of the DIA into a separate file, so you get at least as many files as you have workers.

This is not a problem for the corresponding Read methods, because they can read and concatenate a list of files.

And creating many files is obviously the cheapest way to do it in a distributed system. However, in the beginning it may be somewhat surprising, so I am warning you now.

63 - Actions: Size, Print, and more

And then there are Actions. Well, to be precise the WriteLines and WriteBinary operations already were Actions. But this is the overview of many other Actions.

Actions take a DIA and calculate a non-DIA result from it. This triggers the chain of executions necessary to produce that result.

Perhaps the most commonly used ones are Size and AllGather. Size just calculates the size of a DIA.

And AllGather collects the entire DIA content on all workers. This is of course a somewhat dangerous operation, and should only be used for small DIAs or for debugging. There is also a Gather operation which collects it only on one worker.

Speaking of debugging. The Print action is invaluable! It simply prints objects using the std::ostream operator. Use it often! I can only repeat: Use it often!

Execute is a helper action, which triggers execution of a DIA chain, but doesn't actually deliver any result.

And then there are the AllReduce methods. As I mentioned before, AllGather is often used, but cannot work on large DIAs. For that you probably want to use AllReduce, or Sum, or Min, or Max, which are actually only AllReduce in disguise.

The AllReduce methods are the most scalable, distributed way to calculate a result. The hard part is determining how to use them to calculate something useful in a larger algorithm.
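To see why AllReduce scales, here is a sequential simulation of the classic recursive-doubling (butterfly) algorithm, which is one common way to implement it and not necessarily what Thrill uses. In round d, each worker r combines its value with that of worker r XOR 2^d, so after log2(p) rounds every worker holds the full result. Sum, Min, and Max differ only in the operator. The sketch assumes p is a power of two and the operator is associative and commutative; the function name is hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <vector>

// values[r] is the local input of worker r; returns the value each worker holds at the end.
template <typename T, typename Op>
std::vector<T> ButterflyAllReduce(std::vector<T> values, Op op) {
    const std::size_t p = values.size();
    assert(p > 0 && (p & (p - 1)) == 0);  // power of two
    for (std::size_t dist = 1; dist < p; dist *= 2) {
        std::vector<T> next(values);
        // All exchanges of one round happen "simultaneously": read old, write new.
        for (std::size_t r = 0; r < p; ++r) next[r] = op(values[r], values[r ^ dist]);
        values = next;
    }
    return values;
}
```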

64 - The Thrill Framework: Tutorial: Playing with DIA Operations

Wow. That was the end of quite a long list of DIA operations.

The previous slides also function as a kind of visual reference of what operations are available and what they do.

Coding with Thrill often boils down to picking the right DIA operations, parameterizing them in the right way, and then combining them into a larger algorithm.

The DIA operations reference list that we just went through is therefore really valuable for coding algorithms in Thrill.

In this next section, I will show you how to actually code using these DIA operations. As the title says, we are going to play with DIA operations.

65 - Playing with DIA Operations

In the first section I showed you a Hello World program. We will soon start from that and add some DIA operations.

But how do you figure out the actual C++ syntax?

For that the easiest way is to look at example code from the Thrill repository, or to look at the function definition in the doxygen documentation, which you can find under this link here.

And all of the operations are listed here somewhere, and you can find the full C++ syntax. However, this list is pretty long, for example there are multiple different variants of all of the different functions.

But I believe the first thing to do is to try and guess, because the illustrated documentation is pretty close to what is needed in C++.

66 - Playing with DIA Operations

Okay, how to get your first DIA object?

We already know how to bootstrap everything with the Hello World program.

The only other thing you need to know is that initial DIAs are created from Source operations, and these source operations take the Context as first parameter. This first parameter is actually missing from the illustrations in the last section for simplicity.

But the rule is simple, if no parameter of an operation is a DIA, then the Context has to be passed as first parameter.

So here you see how to add a ReadLines and a Print operation to the Hello World program. It is good practice to add the name of the variable to the Print call.

And since we are going to use this as a starting point later, I will now copy the code and compile and run it.

(live-coding demonstration)

67 - Playing with DIA Operations

So now we have a DIA. And with that object you can call other DIA operations. Most operations are methods of the DIA class, like .Sum() or .Print() and so on. But there are also some free functions, like Zip, which take more than one DIA as a parameter.

It is good practice to use the auto keyword to save the result of a DIA operation. There is a lot of magic going on in the background which is captured by this auto.

But maybe the most elegant and preferred way is simply to chain the operations with the dot "operator", which immediately calls methods on the resulting temporary DIA handles. This is of course the same as using auto.

68 - Playing with DIA Operations

And some more explanation about these DIA objects:

The DIAs in the code are actually only handles to the "real" DIAs inside the data flow graph.

This means you can copy, assign, and return these DIAs without any processing cost. You can pass DIAs as template parameters, which is of course the same as using autos. And again, better use templates instead of explicitly writing DIA<T>.

69 - Playing with DIA Operations

And of course the easiest way to write small functions needed for the many parameters of DIA operations is to use lambdas like you already saw in the WordCount example.

Here it does not matter if you use explicit types or the auto keyword. I use explicit types most of the time, despite them often generating lots of template error messages until you get them right.

70 - Context Methods for Synchronization

And now for some more details on the interface. Besides the DIA operations interface, there are also many methods in the Context class which are useful.

The first you already saw: my_rank() returns the rank or ordinal number of the worker. It is most often used to print some result only once.

But there are many others, which are similar to MPI's collective operations: you can broadcast, prefix sum, and allreduce items. Different from MPI, these methods use Thrill's serialization, which means they automatically work with many different types.

And there is also a Barrier synchronization method, in case you need that, for example for timing.

71 - Serializing Objects in DIAs

I often talk about Thrill's serialization without giving any details. This is because it mostly just works the way you think it should.

The serialization framework automatically works for PODs, plain old data types, which are integers, characters, doubles, floats, structs, and classes containing only PODs. Most importantly, fixed-size classes are PODs, I believe, if they only have the default constructor and not some specific constructor.

Many of the STL classes are also serialized automatically: strings of course, pairs, tuples, vectors, and so on.

The serialization format for all of these is totally trivial. Trivial types are serialized as verbatim bytes. For strings or vectors, the number of characters or items in the vector is prefixed to the actual data. In the end it's all quite simple.

If you run into problems with a class not being serializable, you can add a serialize() method. For more complex classes such as this one which contains a variable length string, we use the cereal framework, that's the cereal as in your morning breakfast.

See the cereal web site for more info. But it is really mostly as simple as this piece of code here: you have a serialize() method, which takes a template Archive, and puts or restores all items of the struct to or from the Archive. That's it, and then this item becomes serializable.
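The cereal pattern of a single template `serialize()` member used for both writing and reading can be demonstrated without cereal itself. The two archives below (`ToyOutArchive`, `ToyInArchive`) are hypothetical toy stand-ins, not cereal's or Thrill's classes: they copy arithmetic values verbatim and prefix strings with their length as a 64-bit integer, mirroring the simple format described above.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <string>
#include <type_traits>
#include <vector>

// Toy writer: arithmetic values verbatim, strings length-prefixed, classes via serialize().
struct ToyOutArchive {
    std::vector<unsigned char> bytes;
    template <typename... Ts>
    void operator()(Ts&... values) { (Put(values), ...); }
    template <typename T>
    void Put(T& v) {
        if constexpr (std::is_arithmetic_v<T>) {
            PutRaw(&v, sizeof(T));
        } else if constexpr (std::is_same_v<T, std::string>) {
            std::uint64_t len = v.size();
            PutRaw(&len, sizeof(len));
            PutRaw(v.data(), v.size());
        } else {
            v.serialize(*this);  // cereal-style member function
        }
    }
    void PutRaw(const void* p, std::size_t n) {
        const auto* c = static_cast<const unsigned char*>(p);
        bytes.insert(bytes.end(), c, c + n);
    }
};

// Toy reader: the exact mirror image of ToyOutArchive.
struct ToyInArchive {
    const std::vector<unsigned char>& bytes;
    std::size_t pos = 0;
    template <typename... Ts>
    void operator()(Ts&... values) { (Get(values), ...); }
    template <typename T>
    void Get(T& v) {
        if constexpr (std::is_arithmetic_v<T>) {
            GetRaw(&v, sizeof(T));
        } else if constexpr (std::is_same_v<T, std::string>) {
            std::uint64_t len = 0;
            GetRaw(&len, sizeof(len));
            v.resize(len);
            GetRaw(v.data(), len);
        } else {
            v.serialize(*this);
        }
    }
    void GetRaw(void* p, std::size_t n) {
        assert(pos + n <= bytes.size());
        if (n) std::memcpy(p, bytes.data() + pos, n);
        pos += n;
    }
};

// A struct with a variable-length member: one serialize() lists the members for both directions.
struct Record {
    int id = 0;
    double score = 0;
    std::string name;
    template <typename Archive>
    void serialize(Archive& ar) { ar(id, score, name); }
};
```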

72 - Warning: Collective Execution!

And now one last word of warning, before we jump into some real examples.

Maybe the most important word of warning: in Thrill programs, all workers must perform all distributed operations in the same order!

This is of course because the distributed operations implicitly synchronize their collective execution. This allows the implementations to assume the states the others are in, without communicating at all. This saves communication and latency.

So it is important to remember that something like the following code piece does not work. Why?

(pause)

Simple: the .Size() method here is run only by the worker with rank 0. That just does not work, because all workers must run all distributed operations.

This is of course a trivial example, but it is actually quite a common pitfall, because Thrill's syntax is so convenient.

73 - Tutorial: Playing with DIAs

Okay, let's get to the tutorial part.

Here are some examples of small tasks you can try to implement in Thrill to get a feeling of the framework, and how to use it.

All are very simple, and you just have to use the previous slides containing the DIA operations, figure out how the actual C++ syntax works, and apply and parameterize a few of them. Very simple stuff.

For the purpose of this YouTube video, I will actually do the third one.

If you try to do them yourself: one word of advice. Use lots of .Print() methods! Just print out the DIAs and figure out what the next step should be.

(live-coding demonstration)

74 - The Thrill Framework: Execution of Collective Operations in Thrill

So in the last section, I gave you an overview of how to actually program in Thrill by detailing how to chain DIA operations to form a DIA data-flow graph.

And I even wrote a simple Thrill program for you and ran it on some input data.

Now we are going to look at more details of the DIA operations and discuss how they are actually executed.

Much of what you saw up to now could just as well have been written in a functional programming language as list operations; there were very few details about how everything is run on a distributed system.

75 - Execution on Cluster

But Thrill is of course a distributed framework. Which means it is designed to run on a cluster of machines.

This is implemented in Thrill by compiling everything into one single binary, which is launched on all machines simultaneously.

This one binary uses all the cores of the machine, and attempts to connect to other instances of itself running on other hosts via the network.

This one binary is exactly what we have been compiling in the examples previously. It can be run on a single machine, or on many connected via a network.

This schema is similar to how MPI works: there is only one binary, which is run using mpirun on a cluster. And as in Thrill, flow control is decided via collective coordination using the C++ host language.

For Thrill there are launcher scripts, which can be used to copy and run the binary via ssh or via MPI. More about those in the following hands-on tutorial section.

One word about names: machines are called hosts in Thrill-speak, while individual threads on cores of a host are called workers. We use this terminology to avoid the word "node", especially for network hosts. Cores or threads are also ambiguous.

76 - Example: WordCount in Thrill

For an overview of what actually happens when Thrill is run on a distributed system, let us reconsider the WordCount example from the beginning.

WordCount consists of five DIA operations, and you have seen what each of them does previously in this tutorial.

The first is ReadLines, which creates a DIA of std::string from a set of text files, with each line becoming one DIA item.

This DIA is immediately pushed into a FlatMap operation, which splits the lines apart into words. The output of the FlatMap is a DIA of Pairs, containing one Pair for each word, namely ("word",1).

In the example, I labeled this DIA word_pairs. That is of course completely unnecessary, and only to show that you can assign DIAs to variable names.

This DIA of Pairs is reduced using ReduceByKey. Remember, the ReduceByKey operation collects all items with the same key and applies the reduction function to combine them. For this we parameterize the ReduceByKey method with a key extractor, which just takes out the word from the Pair, and a reduction function, which adds together the second components of the Pairs, which in this case are the word counters. The key is obviously the same in a and b inside the reduction function, so we can pick either of them.

The result of ReduceByKey is already the word counts which we want, but to write the result using WriteLines, we have to add a Map from Pair to std::string. This is because WriteLines can only write a DIA of std::strings. So this Map operation is actually used to format the Pair as a string.
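To make the functional view concrete, here is a plain C++ sketch of what the five operations compute, using only the standard library instead of Thrill's API. It deliberately materializes every intermediate result as a container, which is exactly what Thrill avoids at run time, and it uses a std::map only so that the output comes out sorted. The function name WordCountFunctional and the "word: count" output format are made up for this illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <sstream>
#include <string>
#include <utility>
#include <vector>

using Pair = std::pair<std::string, size_t>;

// Sequential model of ReadLines -> FlatMap -> ReduceByKey -> Map -> WriteLines.
// Input lines stand in for ReadLines, the returned strings for WriteLines.
std::vector<std::string> WordCountFunctional(const std::vector<std::string>& lines) {
    // FlatMap: emit one ("word", 1) Pair per whitespace-separated word.
    std::vector<Pair> word_pairs;
    for (const std::string& line : lines) {
        std::istringstream iss(line);
        std::string word;
        while (iss >> word) word_pairs.emplace_back(word, 1);
    }
    // ReduceByKey: a key extractor and a reduction function, as in the text.
    auto key_extractor = [](const Pair& p) { return p.first; };
    auto reduce_fn = [](const Pair& a, const Pair& b) {
        return Pair(a.first, a.second + b.second);  // key is equal in a and b
    };
    std::map<std::string, Pair> reduced;
    for (const Pair& p : word_pairs) {
        const std::string key = key_extractor(p);
        auto it = reduced.find(key);
        if (it == reduced.end()) reduced.emplace(key, p);
        else it->second = reduce_fn(it->second, p);
    }
    // Map: format each Pair as a std::string so it can be "written".
    std::vector<std::string> out;
    for (const auto& kv : reduced)
        out.push_back(kv.second.first + ": " + std::to_string(kv.second.second));
    return out;
}
```

For example, the two lines "a b a" and "b c" yield "a: 2", "b: 2", and "c: 1".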

Yes, and that's it, that is WordCount in Thrill.

As said before, these are just 20 lines of code, excluding the main caller program, which is where the Context comes from, and which you have seen before.

But what I just explained is the functional view of the program. There was nothing parallel or distributed about what I just explained.

Now, let's look at what actually happens when this Thrill program is executed.

ReadLines takes as input a list of text files. These text files are sorted lexicographically and split up evenly among the workers: Thrill looks at their total size in bytes, partitions the bytes or characters evenly, and then searches forward at these places for the next newline.
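The splitting rule can be sketched in a few lines. This is a simplified model, not Thrill's code: it assumes the sorted input files have already been concatenated into one string, cuts it at byte offsets i*n/p, and moves each cut forward to the start of the next line, so that every line belongs to exactly one worker. SplitByLines is a hypothetical name.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <utility>
#include <vector>

// Returns one half-open byte range [begin, end) per worker.
std::vector<std::pair<size_t, size_t>> SplitByLines(const std::string& data, size_t p) {
    const size_t n = data.size();
    std::vector<size_t> cuts(p + 1);
    cuts[0] = 0;
    cuts[p] = n;
    for (size_t i = 1; i < p; ++i) {
        size_t pos = i * n / p;  // even split by bytes
        // If pos is not already at a line start, search forward for the
        // next newline and start right after it.
        if (pos > 0 && data[pos - 1] != '\n') {
            const size_t nl = data.find('\n', pos);
            pos = (nl == std::string::npos) ? n : nl + 1;
        }
        cuts[i] = pos;
    }
    std::vector<std::pair<size_t, size_t>> ranges;
    for (size_t i = 0; i < p; ++i) ranges.emplace_back(cuts[i], cuts[i + 1]);
    return ranges;
}
```

Because "next line start at or after pos" is monotone in pos, the ranges never overlap, and each worker can compute its own range locally from the total size.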

So the DIA of lines is actually evenly split among the workers. These then execute the FlatMap on each line of the DIA. However, consider for a second how large the DIA of Pairs (word,1) actually is.

It is huge!

But luckily, Thrill never actually materializes DIAs unless absolutely necessary: remember, everything is a lazily executed DIA data-flow graph. With rare exceptions, the DIAs never actually exist.

So what actually happens is that the text files are read block by block, the lines are split by scanning the data buffer, and each line is immediately processed by the FlatMap. There is even a variant using a string_view, such that the characters aren't even copied from the read buffer into a temporary std::string.
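The following sketch illustrates this chaining with the standard library only; it is a model of the idea, not Thrill's implementation. Lines are found by scanning a buffer, each line is handed to a FlatMap-like function as a std::string_view, and every emitted word goes straight into a local hash table, so neither a vector of lines nor a vector of (word,1) Pairs is ever built. FlatMapWords and LocalPreReduce are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <string_view>
#include <unordered_map>

// FlatMap stage: calls emit(word) for each space-separated word in a line.
template <typename Emit>
void FlatMapWords(std::string_view line, Emit&& emit) {
    size_t i = 0;
    while (i < line.size()) {
        while (i < line.size() && line[i] == ' ') ++i;
        size_t j = i;
        while (j < line.size() && line[j] != ' ') ++j;
        if (j > i) emit(line.substr(i, j - i));  // view into the buffer, no copy
        i = j;
    }
}

// ReadLines + FlatMap + first ReduceByKey phase, fused into one loop.
std::unordered_map<std::string, size_t> LocalPreReduce(std::string_view buffer) {
    std::unordered_map<std::string, size_t> table;
    size_t start = 0;
    while (start < buffer.size()) {
        size_t nl = buffer.find('\n', start);
        if (nl == std::string_view::npos) nl = buffer.size();
        // Each line goes to the FlatMap as soon as it is found ...
        FlatMapWords(buffer.substr(start, nl - start), [&](std::string_view word) {
            // ... and each (word,1) is reduced into the table immediately.
            // Only the table key is copied into a std::string.
            table[std::string(word)] += 1;
        });
        start = nl + 1;
    }
    return table;
}
```

The only sizeable data structure left is the hash table, which matches the memory behavior described here.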

The (word,1) Pairs are likewise processed immediately by the following ReduceByKey operation.

And what is ReduceByKey? It is actually a set of hash tables on the workers: in the first phase, the items are placed in a local hash table, and items with the same key are immediately reduced in the cells of the hash table.

So, again, neither the DIA of lines, nor the DIA of Pairs actually ever exists as an array. The text files are read, split, and immediately reduced into the hash table in ReduceByKey. The hash table is the only thing that uses lots of memory.

And all of this is done in parallel and on distributed hosts.

So after the first phase, each worker has a locally reduced set of word counts. These then have to be exchanged to create global word counts.

This is done in ReduceByKey by transmitting parts of the hash table: the entire hash value space is partitioned equally among the workers, and because the hash values can be assumed to be evenly distributed, each worker gets approximately the same number of keys.

So each worker is assigned a part of the global hash value space, which can actually be done without any communication, and each worker then receives all keys for its partition from all workers. So each worker transmits almost its entire hash table, excluding the part for itself, but in a pre-reduced form.

On the other side, the items are received from all workers and reduced in a second hash table phase, and again items with the same key are reduced in the cells of the hash table.
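Both phases and the communication-free partitioning can be simulated on a single machine. In this sketch, which is a model and not Thrill's code, p simulated workers each hold a pre-reduced local table; every key is assigned to a worker by splitting the range of std::hash values into p equal contiguous parts, and the receiving worker reduces incoming entries into a second table. TargetWorker, ExchangeAndReduce, and the use of std::hash are assumptions made for illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <functional>
#include <string>
#include <unordered_map>
#include <vector>

using Table = std::unordered_map<std::string, size_t>;

// Every worker computes this locally, so no communication is needed.
size_t TargetWorker(const std::string& key, size_t p) {
    if (p == 1) return 0;  // avoids overflow in the range width below
    const size_t h = std::hash<std::string>{}(key);
    const size_t range = SIZE_MAX / p + 1;  // width of one worker's hash range
    return h / range;                       // always < p
}

// Phase 2: every worker "sends" each pre-reduced entry to its target worker,
// which reduces all incoming entries into a second hash table.
std::vector<Table> ExchangeAndReduce(const std::vector<Table>& local_tables) {
    const size_t p = local_tables.size();
    std::vector<Table> global(p);
    for (const Table& local : local_tables)
        for (const auto& entry : local)
            global[TargetWorker(entry.first, p)][entry.first] += entry.second;
    return global;
}
```

Afterwards each key lives on exactly one worker, and its count is the global total.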

Back to the WordCount example. When the second reduce phase is finished, the hash tables in each worker contain an approximately equal number of items.

The following DIA operations consist of the Map from Pair to string and the WriteLines operation. Again, these steps are chained or pipelined, which means each worker scans over the items in its hash table, applies the Map operation item-wise, and pushes each item into a WriteLines buffer. The WriteLines buffer is flushed to disk when full.

So that all workers can operate in parallel, each worker writes its own text file. This means there will be many, many output files, which, concatenated in lexicographic order, form the complete result of this program.

On a side note: we used to have a method to write just one big text file, but the distributed file systems used on clusters actually handle many files written in parallel better than a single file written at multiple places at once.

So this is what actually happens when Thrill executes this WordCount program. Each operation is internally implemented using distributed algorithms, and they are chained together using the DIA concept. The DIAs, however, never actually exist; they are only the conceptual "glue" between the operations. The operations themselves determine how the items are stored in memory or on disk.
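Lexicographic order only reproduces the worker order if the worker rank is embedded in the file name with a fixed width: without padding, "out-10" sorts before "out-2". The sketch below uses a hypothetical zero-padded naming scheme; OutputFileName, the "-" separator, and the ".txt" suffix are invented here, and Thrill's own WriteLines naming may differ.

```cpp
#include <cassert>
#include <cstddef>
#include <string>

// Builds e.g. "out-00002.txt" for worker 2 so that a plain lexicographic
// sort of the file names matches the worker order.
std::string OutputFileName(const std::string& prefix, size_t worker, size_t width = 5) {
    std::string rank = std::to_string(worker);
    if (rank.size() < width) rank.insert(0, width - rank.size(), '0');
    return prefix + "-" + rank + ".txt";
}
```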

In the case of WordCount, the ReduceByKey stores the items in a hash table, and possibly spills slices out to disk if it grows too full, more about that later. ReadLines and WriteLines only have a small read or write buffer, and the FlatMap and Map are integrated into the processing chain.

Yes, that was WordCount.

77 - Mapping Data-Flow Nodes to Cluster

Now let's have a closer look at how this processing chain is implemented in Thrill.

On the left here there is an example DIA data-flow graph: a ReadLines, PrefixSum, Map, Zip, and WriteLines. This graph does not have to make sense; it's just an example.

Each distributed operation in Thrill has three phases: a Pre-Op, a Main-Op, and a Post-Op. Each phase is actually only a piece of code.

The Pre-Op receives a stream of items from previous DIA operations and does something with it. For example, in PrefixSum each worker stores the items it receives and keeps a local sum of them.

Once all local items have been read, a global prefix sum of the local sums has to be calculated. This is the Main-Op, and it of course requires communication.

This global prefix sum is the offset value each worker needs to add to its own items for the complete prefix sum result to be correct. This is of course done only when the data is needed, which means in the Post-Op or PushData phase.

In this phase, the stored items are read again, starting from the worker's offset, and each local item is added to the running sum. The items calculated in the Post-Op of PrefixSum are then pushed down to further operations.
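The three phases of PrefixSum can be simulated on a single machine to check the arithmetic. This is a sequential model, not Thrill's code: each inner vector is one worker's share of the DIA, and the Main-Op, which needs communication in a real cluster, is just an exclusive prefix sum over the local sums here. PrefixSumPhases is a hypothetical name.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

std::vector<std::vector<long>> PrefixSumPhases(const std::vector<std::vector<long>>& items) {
    const size_t p = items.size();
    // Pre-Op: each worker stores its items and keeps a local sum.
    std::vector<long> local_sum(p, 0);
    for (size_t w = 0; w < p; ++w)
        for (long x : items[w]) local_sum[w] += x;
    // Main-Op: exclusive prefix sum of the local sums = each worker's offset.
    std::vector<long> offset(p, 0);
    for (size_t w = 1; w < p; ++w) offset[w] = offset[w - 1] + local_sum[w - 1];
    // Post-Op: re-read the stored items, starting from the offset, and
    // produce the running (inclusive) sum that is pushed to the next operation.
    std::vector<std::vector<long>> out(p);
    for (size_t w = 0; w < p; ++w) {
        long running = offset[w];
        for (long x : items[w]) {
            running += x;
            out[w].push_back(running);
        }
    }
    return out;
}
```

With three workers holding {1, 2}, {3}, and {4, 5}, the local sums are 3, 3, 9, the offsets are 0, 3, 6, and the result is {1, 3}, {6}, {10, 15}.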

In this case it is a Map operation, which is easy to apply, and the result of Map is pushed down even further.

Each distributed operation implements its own Pre-Op, Main-Op, and Post-Op. In the case of Zip, incoming items are only stored in the Pre-Op.

In the Main-Op of Zip, the number of stored items is communicated and splitters or alignment indexes are determined. The stored item arrays are then aligned such that all items with the same index are stored on one worker. This can be as expensive as a complete data exchange, or require moving only a few misaligned items, depending on how balanced the input was. In some sense, in Zip the DIA array is actually materialized and then aligned among the workers.

Once the arrays are aligned, the arrays are read again in the Post-Op, the zip function is applied, and the result pushed further down, in this case into WriteLines.
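The alignment step of the Zip Main-Op can be sketched as follows. This assumes one simple strategy, chosen for illustration: every input of the Zip is redistributed to the same even split, so worker t ends up holding global indices [t*n/p, (t+1)*n/p) of each input. Given how many items each worker currently stores, the sketch computes how many of them must be sent to each target worker. ZipSendCounts is a hypothetical name, and Thrill's actual alignment may choose splitters differently.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// send[w][t] = number of items worker w must send to worker t.
std::vector<std::vector<size_t>> ZipSendCounts(const std::vector<size_t>& counts) {
    const size_t p = counts.size();
    size_t n = 0;
    for (size_t c : counts) n += c;
    std::vector<std::vector<size_t>> send(p, std::vector<size_t>(p, 0));
    size_t begin = 0;  // global index of worker w's first item (a prefix sum)
    for (size_t w = 0; w < p; ++w) {
        const size_t end = begin + counts[w];
        for (size_t t = 0; t < p; ++t) {
            const size_t lo = t * n / p, hi = (t + 1) * n / p;  // target range
            const size_t a = std::max(begin, lo), b = std::min(end, hi);
            if (a < b) send[w][t] = b - a;  // overlap of held and target ranges
        }
        begin = end;
    }
    return send;
}
```

For perfectly balanced counts such as {2, 2, 2}, only the diagonal entries are non-zero and nothing moves; for skewed counts such as {4, 0, 2}, worker 0 must ship half of its items to worker 1.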

Okay, so in this example we saw how chains of DIA operations are built. Local operations are simply inserted into the chain, while distributed operations consist of Pre-Op, Main-Op, and Post-Ops.

77b - Mapping Data-Flow Nodes to Cluster

From a BSP model view, the Pre-Op, Main-Op, and Post-Ops are in different supersteps, and the lines in between are barriers. These barriers are impossible or very hard to avoid, because in these cases the next phase can only start when all items of the previous phase have been fully processed.

This also means that local operations, such as the Map here, do not require barriers.

77c - Mapping Data-Flow Nodes to Cluster

Again, this means these can be integrated into the processing pipeline, and Thrill does this on a binary code level using template meta-programming and lambdas.

To be precise, all local operations before and including a Pre-Op are chained and compiled into one piece of binary code. The previous Post-Op cannot be chained, instead there is one virtual function call in between them, which is this line here. The reason is that new DIA operations have to be able to attach to the Post-Op at run-time.
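A toy model of this structure may help. In the sketch below, a chain of local operations ending in a Pre-Op is composed at compile time into a single lambda, which the compiler is free to inline, while a Post-Op pushes each item to its children through one virtual call, so that new children can be attached at run-time. All names (Child, ChainedChild, MakeChain, Attach, PostOp) are invented for this illustration and are not Thrill's classes.

```cpp
#include <cassert>
#include <memory>
#include <utility>
#include <vector>

// Run-time boundary: the single virtual call per item and child.
struct Child {
    virtual ~Child() = default;
    virtual void PushItem(int item) = 0;
};

// MakeChain(pre_op, op1, op2, ...) builds x -> pre_op(...op2(op1(x))).
template <typename PreOp>
auto MakeChain(PreOp pre_op) { return pre_op; }

template <typename PreOp, typename Op, typename... Rest>
auto MakeChain(PreOp pre_op, Op op, Rest... rest) {
    auto inner = MakeChain(pre_op, rest...);
    return [op, inner](int x) { inner(op(x)); };  // one fused callable
}

// Wraps a compile-time chain behind the virtual interface.
template <typename Chain>
struct ChainedChild : Child {
    Chain chain;
    explicit ChainedChild(Chain c) : chain(std::move(c)) {}
    void PushItem(int item) override { chain(item); }
};

template <typename Chain>
std::unique_ptr<Child> Attach(Chain c) {
    return std::make_unique<ChainedChild<Chain>>(std::move(c));
}

// Post-Op: re-reads stored items and pushes each to all attached children.
void PostOp(const std::vector<int>& stored,
            const std::vector<std::unique_ptr<Child>>& children) {
    for (int item : stored)
        for (const auto& child : children) child->PushItem(item);
}
```

For example, attaching MakeChain(sum_pre_op, add_one, times_ten) to a Post-Op holding {1, 2, 3} feeds 20, 30, and 40 into the summing Pre-Op, while a second attached chain independently receives the raw items.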

78 - The Thrill Framework: Tutorial: Running Thrill on a Cluster

So, after these heavy implementation details, let's get back to some hands-on exercises. In this section I will explain how to run Thrill programs on a cluster.

79 - Tutorial: Running Thrill on a Cluster

As mentioned before, Thrill runs on a variety of systems: on standalone multi-core machines, on clusters with TCP/IP connections via Ethernet, or on MPI systems.

There are two basic steps to launching a Thrill program on a distributed cluster: step one is getting the binary to all hosts, and step two is running the program and passing information to all hosts on how to connect to the other instances.

Thrill does not read any configuration files for this, because these would also have to be distributed along with the binary. Instead, Thrill reads environment variables, which are set by the launcher scripts.

80 - Tutorial: On One Multi-Core Machine

Okay, but before we get to clusters, first more about running Thrill on a single multi-core machine. This is the default startup mode, and you have already used or seen me use it in this video.

This default startup mode is mainly for testing and debugging: the default is that Thrill actually creates two "virtual" test hosts on a single machine, and splits the number of cores equally onto the virtual hosts. These then communicate via local kernel-level TCP stream sockets. This means this setup is ideal for testing everything in Thrill before going onto real clusters.

When Thrill is run, it will always print out a line describing the network system it detected and is going to use. You already saw this line in the first example: Thrill is running locally with 2 hosts and 4 workers per host in a local tcp network.

There are two environment variables to change the parameters. THRILL_LOCAL changes the number of virtual test hosts, and THRILL_WORKERS_PER_HOST changes the number of threads per virtual host. I suggest you try changing these parameters on an example program.

If you want to run Thrill programs on a standalone multi-core machine for production, it is best to set THRILL_LOCAL to 1, because then all communication between workers is performed internally without going through the kernel.
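The following sketch shows how a program can derive such a local test configuration from the environment. It only mirrors the behavior described above (two virtual hosts by default, with the cores split equally among them) and is not Thrill's startup code; LocalConfig, EnvOr, and ReadLocalConfig are hypothetical names, while THRILL_LOCAL and THRILL_WORKERS_PER_HOST are the variables named in the text.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdlib>
#include <string>
#include <thread>

struct LocalConfig {
    size_t hosts;
    size_t workers_per_host;
};

// Reads an unsigned number from the environment, or returns the fallback.
// std::stoul throws on malformed values; a real launcher would validate them.
size_t EnvOr(const char* name, size_t fallback) {
    const char* value = std::getenv(name);
    if (value == nullptr || *value == '\0') return fallback;
    return static_cast<size_t>(std::stoul(value));
}

LocalConfig ReadLocalConfig() {
    size_t cores = std::thread::hardware_concurrency();
    if (cores == 0) cores = 2;  // hardware_concurrency() may return 0
    LocalConfig cfg;
    cfg.hosts = EnvOr("THRILL_LOCAL", 2);  // default: two virtual hosts
    if (cfg.hosts == 0) cfg.hosts = 1;
    const size_t per_host = cores / cfg.hosts;  // split cores equally
    cfg.workers_per_host =
        EnvOr("THRILL_WORKERS_PER_HOST", per_host == 0 ? 1 : per_host);
    return cfg;
}
```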

81 - Tutorial: Running via ssh

This next method is used for launching Thrill programs via ssh.

This method works for plain-old Linux machines which you can access via ssh, for example, I used this for AWS EC2 virtual machines.

For it to work, you must be able to ssh onto the machines without entering a password, so please install ssh keys.

In principle, a shell script is then used to ssh onto all the machines, run the Thrill program simultaneously on all of them and pass the other host names via an environment variable such that they can connect to each other.
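On the receiving side, each instance has to turn the passed host names into a list of peers. This is a minimal sketch assuming the launcher passes a whitespace-separated list of host:port entries together with the instance's own rank; the exact variable names and formats used by Thrill's invoke.sh are not shown here, and ParseHostList and Peers are hypothetical helpers.

```cpp
#include <cassert>
#include <cstddef>
#include <sstream>
#include <string>
#include <vector>

// Splits a whitespace-separated host list into entries.
std::vector<std::string> ParseHostList(const std::string& list) {
    std::vector<std::string> hosts;
    std::istringstream iss(list);
    std::string host;
    while (iss >> host) hosts.push_back(host);
    return hosts;
}

// Every instance receives the same list plus its own rank, and then
// connects to all other entries.
std::vector<std::string> Peers(const std::vector<std::string>& hosts, size_t my_rank) {
    std::vector<std::string> peers;
    for (size_t i = 0; i < hosts.size(); ++i)
        if (i != my_rank) peers.push_back(hosts[i]);
    return peers;
}
```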

For this the Thrill repository contains an "invoke.sh" script. This script takes a list of hosts, optionally a remote user name, and a Thrill binary path.

The script also has many more parameters, which you can see by just running it. These cover, for example, the case where the host names used for ssh differ from those needed for connecting directly among the running instances.

However, there is one important flag I would like to mention: "-c", which copies the Thrill binary via ssh to all remote machines. If your cluster is set up to have a common file system, like NFS, Ceph or Lustre, then the binary can be accessed on all machines directly, and the script can just call a full path.

If, however, the machines do not share a file system, for example, AWS EC2 instances, then add the "-c" flag and the script will copy the binary onto all machines into the /tmp folder and run it from there.

82 - Tutorial: Running via MPI